FaceApp, a new service for iOS and Android devices that uses machine learning to morph user photos, is proving that when it comes to download-driven apps, all press really is good press. The once-obscure, then-viral, now-embattled app is seeing continued, incredible success, but that success comes as much from controversy and online amusement as from appreciation for what it can do.

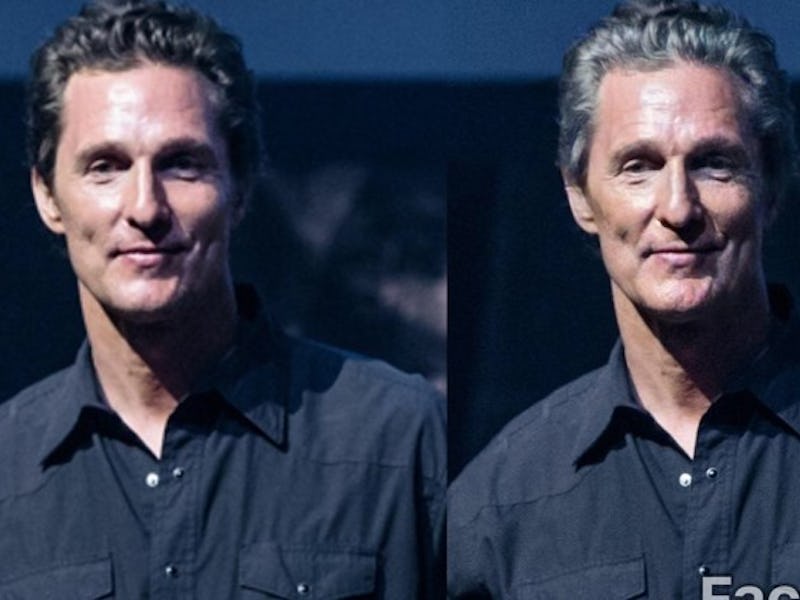

The app itself is simple enough in concept: have an A.I. look at large numbers of photos of faces to learn the most reliable attributes of, say, smiling faces or old faces. The program can then take new user-submitted photos and bring out those features, even if they are not already present in the photo, turning a frown into a brilliant smile or a young person into a geriatric. This is more sophisticated than just pasting on a fake mouth: the app subtly layers facial details learned from other photos onto the cheeks, mouth, and eyes to make the new picture look more realistic.

At least, that’s the idea. The age filters seem to be getting the best response overall, while the smile filter is getting the worst. The app also seems to have real trouble with non-standard faces. Just look at what it did to this poor, innocent baby.

In general, people seem to spend far more time trying (and succeeding) to break the app and produce funny or creepy results than using it as intended. But again, even that impulse is leading to gobs of downloads, if only so people can see how bad it makes them look. As of this writing, the app has been installed on between one and five million devices through the Android store alone.

That’s pretty incredible, considering that people seem to be using the app primarily to do things like turn Mark Zuckerberg into … this.

But can this success continue in the face of accusations of racism?

It turns out that one of FaceApp’s other main filters is designed to make people look “hot,” and, like a slow-motion train wreck, the internet began to notice a pattern in these allegedly sexed-up selfies: the A.I. was reliably lightening people’s skin in an attempt to make them look more attractive.

That’s the sort of CEO panic usually reserved for Travis Kalanick, but it’s becoming almost routine for executives at companies applying machine learning to user photos. Though its filters are nowhere near as sophisticated, Snapchat recently came under fire for creating racist imagery, and even for precisely the same conflation of hotness and lightness we see here.

Wireless Lab, the company behind FaceApp, has since apologized for the filter and made the inexplicable move of renaming it “spark,” supposedly to remove all “positive connotations.” Since the name “spark” both a) refers to the idea of hotness and b) doesn’t specifically mean anything, it seems incredible that the company didn’t simply axe the filter entirely.

The company claims the problem will get a “complete fix” soon, but what could that possibly mean? The team has been vague about the precise dataset used to train this A.I., but it’s a safe assumption that it learned its idea of hotness from real human ratings. So a “complete fix” would likely mean either artificially adjusting its output to be more socially acceptable, or actually going out into the world and fixing racism, then training a new hotness A.I. to accurately reflect this new reality.

It does seem like it would be easier to just nix the idea altogether, doesn’t it? But then, just how successful would FaceApp have been without all the hate, amusement, and widespread outrage?