Makers of the First "Electronic Person" Predicted Our Current Robot Debate

Don't conflate the future with the past.

Last week, a proposal to the European Parliament made international headlines by suggesting that Europe should recognize some robots and advanced artificial intelligence as “electronic persons” with a special set of legal restrictions, and even some limited rights.

The new proposal is one of several aimed at creating the first legal framework for robots. It is also the most frivolous: a technologically ignorant and petulant attempt to protect human workers from the inevitable upheaval caused by increased automation. Much of the press covered it as a cutting-edge, “Welcome to the Future”-type deal, but it is, in fact, a piece of nostalgia. The social shift it predicts has been front of mind for roboticists and A.I. specialists for 50 years, since the creation of Shakey.

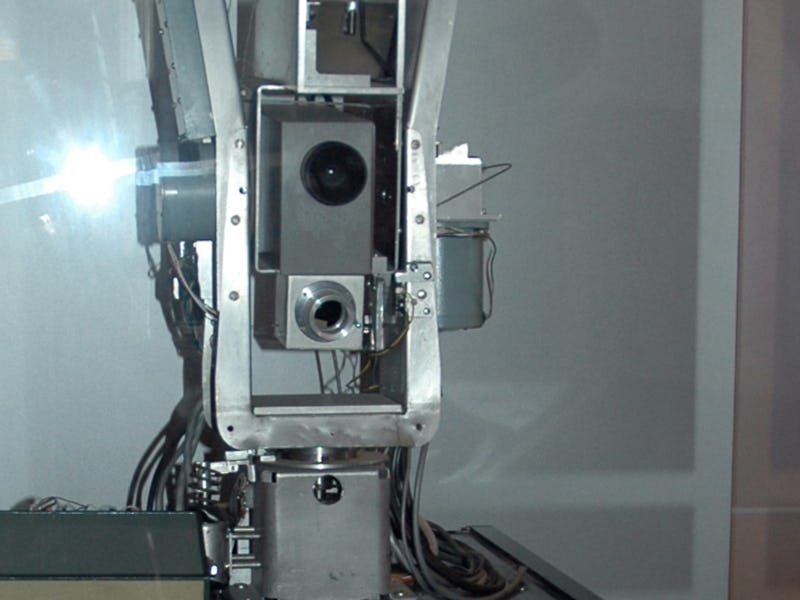

The term “electronic person” was coined in a 1970 LIFE magazine article profiling Shakey, a DARPA-funded robot that was very, very obviously not an “electronic person,” as a Stanford researcher claimed in the anecdotal lede. By the standards of the era, though, Shakey was incredible: “he” could read patterns on the floor to navigate, find a specified object in 3D space, and move it to a new spot on request. If presented with a very specific obstacle to that goal, Shakey could pick one of a handful of solutions, then continue with his job. Despite looking like an up-jumped overhead projector, he seemed to have an abstract understanding of the world, and the people around him began to talk about him as if he were some new sort of life-form.

Even the greats were vulnerable to Shakey hype. Legendary A.I. researcher Marvin Minsky said: “In from three to eight years, we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will begin to educate itself with fantastic speed. In a few months it will be at genius level, and a few months after that its powers will be incalculable.” Even on the longest of those timelines, Minsky was predicting we’d have a primitive version of Star Trek’s Data by 1978.

The similarly magical thinking behind this EU proposal is evident from the first, frankly amazing, whereas clause: “Whereas from Mary Shelley’s Frankenstein’s Monster to the classical myth of Pygmalion, through the story of Prague’s Golem to the robot of Karel Čapek, who coined the word, people have fantasized about the possibility of building intelligent machines, more often than not androids with human features.” Nice.

Still, while this attempt to anthropomorphize robots belongs to the realm of heart-fluttery science-fiction optimism, it’s worth noting that we really are starting to build the world Minsky and his cohorts were imagining. Shakey’s designers dreamed that he could be the basis for super-advanced game-playing A.I. like AlphaGo, and for autonomous space landers that explore alien worlds without constant direction from Earth. It may have taken half a century, but humanity did eventually get that done. These researchers definitely misjudged the timelines for A.I. development, but when it comes to optimism, that was just the start. Minsky told LIFE, “The machine dehumanized man, but it could rehumanize him,” meaning that while the industrial revolution had turned much of work into machine-like drudgery, another great technological leap could liberate us from work altogether.

Sound familiar? From the loom to the learning algorithm, automation has always been at least somewhat associated with the end of work — sometimes depicted as a laid-back utopia free from obligation and unnecessary stress, other times as a colorless existence devoid of passion and real human achievement. This new EU proposal definitely sees things through the latter of these two lenses, but at the end of the day it’s much more worried about how society might (or might not) continue to function after work. This is, essentially, the modern debate over “guaranteed minimum income.” Be sure to watch Canada’s attempt to test that idea over the next few years.

According to the EU draft report behind this proposed robot personhood, “The more autonomous robots are, the less they can be considered simple tools in the hands of other actors (such as the manufacturer, the owner, the user, etc.)… This, in turn, makes the ordinary rules on liability insufficient and calls for new rules which focus on how a machine can be held — partly or entirely — responsible for its acts or omissions.”

Robonaut, meet astronaut.

If this proposal is passed and a robot punches you in the face, the legal response could literally end up being that the robot gets punished. How satisfying would you find that, compared with a fine for the programmer who wrote the faulty face-punching subroutine in the first place? Anthropomorphizing robots is absurd at the best of times, but now we’re talking about, what, putting robots in jail? Executing them? It may be necessary to have provisions to forcibly yank a robot out of circulation and “retire” it, but if we do, it should be to protect society, not to punish a very advanced inanimate object. Will there be a robot rehabilitation program, as well?

The other big problem for this proposal is that virtually every model for insuring self-driving cars puts at least some liability for an accident on the company or companies that made the car’s software and its physical body. That cost is expected to be passed along to consumers in the form of higher base car prices, but it could ultimately save them money through lower insurance rates. Most assume that autonomous vehicles will be the test case for robot liability in general, so there’s simply no reason to think that robot liability will be a major problem.

The reality is that we may end up having to accept laxer rules for robot liability, simply so that the financial risks of developing and releasing autonomous technology don’t stop it altogether. That’s directly at odds with this largely incoherent proposal to the European Parliament, but its structure, including those whereas clauses, makes clear that the real intent is to draw attention to its authors’ worries about the human rights impacts of the end of work, and the need to prepare new economic models to offset them.

Given the level of technical sophistication among modern democratic leaders, though, it’s entirely possible they’ll pass this nonsense anyway.