Marvin Minsky: Read His 1961 Paper on Artificial Intelligence

Minsky helped to bridge the gap between thinking in humans and thinking in computers.

Before we had supercomputers and microprocessors, some geniuses were already thinking about the possibility of artificial intelligence. One of those pioneers, Marvin Minsky, died Sunday night in Boston of a cerebral hemorrhage. He was 88.

Minsky’s groundbreaking research, which began in the 1950s, is enough to fill an entire book. (It has, in fact, filled several.) Among his major contributions to artificial intelligence research was showing how common-sense reasoning could be applied to machine systems.

In other words: Minsky helped to bridge the gap between thinking in humans and thinking in computers.

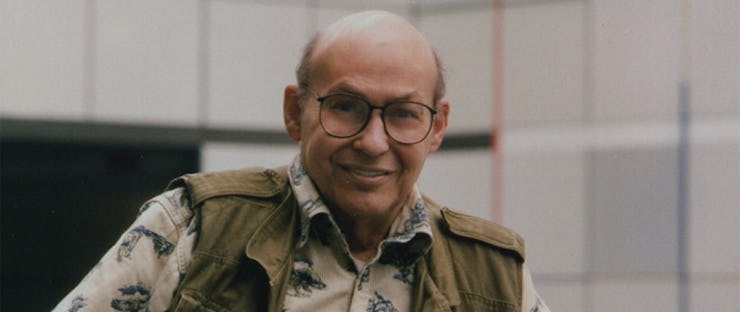

A photo of Minsky appeared on the MIT CSAIL website this week.

In 1959, Minsky co-founded what became the MIT Artificial Intelligence Lab, which in 2003 merged into the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). Among the talented students he mentored were Ray Kurzweil, Gerald Sussman, and Patrick Winston.

Minsky was also one of the authors, with John McCarthy, of the 1955 proposal for the Dartmouth workshop that introduced the term “artificial intelligence.” His foresight is especially evident in his 1961 paper, “Steps Toward Artificial Intelligence,” in which he outlines five essential areas for A.I.: search, pattern recognition, learning, planning, and induction.

Minsky describes how each of these processes might be formalized mathematically and expressed as programs a machine could carry out. But a bigger problem is getting a machine to respond sensibly to a situation it has never directly experienced:

“If a creature can answer a question about a hypothetical experiment, without actually performing that experiment, then the answer must have been obtained from some submachine inside the creature…Seen through this pair of encoding and decoding channels, the internal submachine acts like the environment, and so it has the character of a ‘model.’”

What Minsky describes above is the human ability to build a simulation inside one’s head and use it to predict likely outcomes from the information at hand. A person can then choose how to act in order to reach the best outcome.

The goal of A.I., then, is to build a machine that can do the same thing: act on the basis of an internal “model.”
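To make the idea a little more concrete, here is a toy sketch in Python. It is an illustration, not Minsky’s own formulation: the hypothetical `Agent` class holds an internal model (assumed here to be a hand-written dictionary from situation and action to predicted outcome), answers “what would happen if…” questions by consulting that model instead of acting, and then picks the action whose predicted outcome it prefers.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    # Hypothetical internal "model": maps (situation, action) to a predicted outcome.
    # A real system would learn this; here it is written by hand for illustration.
    model: dict = field(default_factory=dict)
    # Hypothetical preference scores for outcomes (higher is better).
    preferences: dict = field(default_factory=dict)

    def imagine(self, situation, action):
        """Answer a hypothetical question without performing the experiment."""
        return self.model.get((situation, action), "unknown")

    def choose(self, situation, actions):
        """Simulate each action internally and pick the best predicted outcome."""
        return max(
            actions,
            key=lambda a: self.preferences.get(self.imagine(situation, a), 0),
        )


agent = Agent(
    model={
        ("glass on table edge", "leave it"): "glass falls",
        ("glass on table edge", "push it back"): "glass stays",
    },
    preferences={"glass falls": -1, "glass stays": 1},
)

print(agent.imagine("glass on table edge", "leave it"))  # glass falls
print(agent.choose("glass on table edge", ["leave it", "push it back"]))  # push it back
```

The point of the sketch is that `choose` never touches the “environment”; every prediction comes from the agent’s internal submachine, which is the sense in which Minsky calls it a model.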

If that seems a little abstract, here’s another way of understanding what Minsky believed A.I. should be. In 2008, he wrote a short essay about why people — especially children — find mathematics so difficult to learn.

He writes:

“The traditional emphasis on accuracy leads to weakness of ability to make order-of-magnitude estimates — whereas this particular child already knew and could use enough powers of 2 to make approximations that rivaled some adults’ abilities. Why should children learn only ‘fixed-point’ arithmetic, when ‘floating point’ thinking is usually better for problems of everyday life!”

In other words, students are better served by learning math not as a set of mechanical procedures that lead to a single correct answer, but as a flexible system that rewards creativity and improvisation: there are usually several ways to reach a solution, and sometimes more than one solution.
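The powers-of-2 estimation Minsky praises can be sketched in a few lines of Python. This is an illustration, not code from his essay: the hypothetical helpers `nearest_pow2_exponent` and `pow2_estimate` round each number to its nearest power of 2 on a log scale, keeping only an “exponent” much like a floating-point number with almost no mantissa, so that multiplying two numbers becomes adding two small integers.

```python
import math


def nearest_pow2_exponent(x: float) -> int:
    """Exponent k such that 2**k is the power of 2 closest to x on a log scale."""
    return round(math.log2(x))


def pow2_estimate(a: float, b: float) -> int:
    """Estimate a * b by adding the exponents of the nearest powers of 2."""
    return 2 ** (nearest_pow2_exponent(a) + nearest_pow2_exponent(b))


print(1000 * 1000, pow2_estimate(1000, 1000))  # 1000000 1048576
print(300 * 70, pow2_estimate(300, 70))  # 21000 16384
```

Because rounding an exponent is off by at most one half, each factor is off by at most a factor of √2, so the product estimate always lands within a factor of 2 of the true value. That is rough, but it is exactly the order-of-magnitude accuracy the quote values over exact “fixed-point” answers.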

This flexible view of problem-solving also underlies what may be Minsky’s biggest contribution to A.I. In his view, we shouldn’t build smart machines that can only consider solutions in a rigid, step-by-step way; machines of the future should learn to invent their own solutions to problems, the same way humans ought to. As he once put it: “You don’t understand anything until you learn it more than one way.”

Today’s and tomorrow’s A.I. researchers would do well to remember these words.