Microsoft Goes Precrime With an App That Predicts Recidivism

An algorithm will be sizing up the inmates. But can it improve prisons?

In a webcast with police departments earlier this year, a senior Microsoft programmer let slip that the company was deep into developing an app that can predict whether an inmate will be back behind bars within six months of release.

“It’s all about software that has the ability to predict the future,” programmer Jeff King said.

The as-yet-unnamed app works by analyzing an inmate’s history using (what else?) an intricate algorithm, one that King said is up to 91 percent accurate. A few of the factors King said it would consider: gang affiliation, participation in a rehab program, violations while serving time, and hours in administrative segregation. That’s not far, in fact, from the factors parole boards weigh when deciding whether to release inmates.

Those sound like reasonable predictors until you consider that the inmates being evaluated may not have had much choice in how they spent their incarceration. As The Economist notes, prison gangs weren’t even a thing until the second half of the 20th century, when the incarcerated population exploded and life behind bars became so dangerous that men joined gangs for the protection guards were failing to provide. As for rehab, over the past decade cash-strapped states have slashed funding for those programs by hundreds of millions of dollars; California in 2011 notably cut $250 million from substance-abuse treatment, vocational training, and educational programs. Those cuts are at least partly to blame for the share of inmates who’ve taken a rehab program being much lower than it was 20 years ago. Putting the onus on inmates to prove themselves by how they adapt to prison, while absolving prisons of policies more likely to increase recidivism, is at best telling half the story.

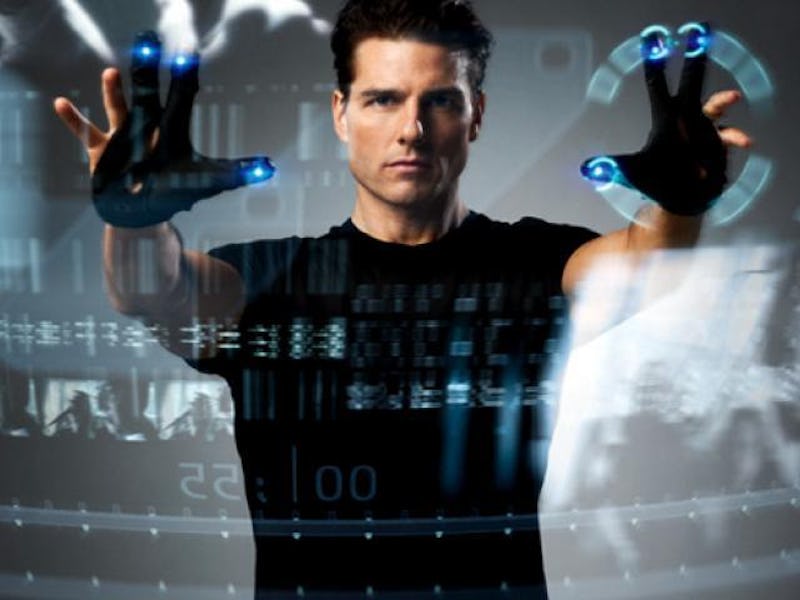

Then there’s the question of what we do with Microsoft’s Minority Report-worthy numbers. Would an ex-con get more social services to help keep him from re-offending? Would parole boards consider the algorithm’s opinion? Would a low score be used to justify longer prison sentences? What about justifying mandatory sentencing, a policy that historically has done a lot more harm than good?

When he broke Microsoft’s predictive plans on Fusion, Daniel Rivero wrote that King’s speech had “virtually gone unnoticed,” with about 100 views of the YouTube video. (Good eye, Daniel.) Maybe it has gone under the radar because it’s just not that surprising anymore. Crime prediction algorithms, and arguments over their nuances and moral implications, are increasingly common. Japanese company Hitachi has half a dozen U.S. cities involved in a proof-of-concept test for a system the company says can help predict crimes by crunching the numbers on historical crime statistics, public transit maps, weather reports, and even social media. On the lower-tech end, Chicago is operating a “heat list” of potential offenders who get random police visits regardless of what they’ve been doing. And Los Angeles is considering an automated reader that would scan the license plate of any car merely driving near an area frequented by prostitutes and send a “Dear John” letter to the name on the vehicle registration. More and more, the best prediction we can give you is that your future is going to be watched and evaluated by an awful lot of alien intelligence. But hey, at least you’ll have a hoverboard.