New York Anarchists Are Fighting to Get Neo-Nazi Videos Off of YouTube

And they're using technology to do it.

A small, pink-haired woman approaches a man leaving the giant glass doors of an office tower in Manhattan’s Chelsea neighborhood.

“Hi, do you work for Google?” she says.

“Yes, I do,” he replies.

“We’re trying to get Google to take nazis off of YouTube,” she says. “YouTube is not enforcing its hate speech policy.”

She hands him a flyer, which he takes, smiling politely, before heading off to the next leg of his commute.

This is the polite tactic of the Metropolitan Anarchist Coordinating Council, known as MACC, a New York City collective that seeks “to seed resistance to an emboldened right-wing through an alternative, radical form of participatory politics,” according to their website. On a windy spring evening, around ten MACC members have gathered in protest at YouTube’s New York offices, a building it shares with its parent company, Google.

This peaceful demonstration is the latest step in MACC’s ‘No Platform for Fascism’ campaign. MACC members recently created the No Platform for Fascism Tool Kit, a downloadable browser plug-in for Chrome and Firefox that alerts users to hate speech-ridden videos on YouTube. The plug-in offers an easy, click-through method for reporting egregious videos that have been flagged by MACC’s research group, like “Join the American Nazi Party,” by ANPisforme, or a disturbing parody version of Eazy E’s “Boyz N Tha Hood” with lyrics that advocate for violence against ethnic minorities, citing “aryan rage.”

MACC says that despite an average of 272 reports per flagged video, only 18 have been taken down, and only nine of those by YouTube. They estimate that the remaining videos (they chose to target 30 initially) have a cumulative view count of over 1 million. It’s this lack of response that’s led anarchists from around New York to YouTube’s front door. With most of the crowd in their twenties and casually dressed in earth tones and black, it’s somewhat difficult to tell the anarchists from the tech company employees.

The parallels aren’t lost on MACC, and possibly not on Google’s employees either, who for the most part are respectful of, if not actively curious about, MACC’s literature.

“We believe that there are many people who work here who do not want nazi content on YouTube,” says Sarah, an organizer with MACC who works on the No Platform campaign. “And of course as workers they have access to different mechanisms to make that change from the inside.”

A pre-written complaint that pops up when reporting videos through the No Platform for Fascism plug-in.

MACC members wait outside of Google's Chelsea offices to offer literature to employees leaving work on Monday, April 16.

A Limited State on Curbing Hate Speech

It’s no secret that YouTube has a hate speech problem. After a ProPublica story in February detailed the inner world of Atomwaffen, a North American white supremacist group implicated in five murders since 2017, a media flurry erupted around how Atomwaffen was still using YouTube to spread its violent, white supremacist message — among other things.

“Atomwaffen produces YouTube videos showing members firing weapons and has filmed members burning the U.S. Constitution and setting fire to the American flag,” ProPublica writes in its report.

Initially, YouTube claimed it had done its due diligence by adding a warning label before content could be viewed on Atomwaffen’s channel. It also removed the ability to share, like, or comment on videos, and nixed the recommended videos tab.

“We believe this approach strikes a good balance between allowing free expression and limiting affected videos’ ability to be widely promoted on YouTube,” a YouTube spokesperson told The Daily Beast in February.

The policy is referred to around the internet as “Limited State,” and it’s the go-to for videos that straddle the line between offensive content and flat-out hate speech, according to YouTube. While the company’s hate speech policies forbid videos that promote violence or incite hatred against individuals or groups, the platform has also created this middle ground for contentious content that fits a specific description:

Some borderline videos, such as those containing inflammatory religious or supremacist content without a direct call to violence or a primary purpose of inciting hatred, may not cross these lines for removal.

This is what limited state looks like.

Amid mounting media pressure, YouTube did eventually ban Atomwaffen’s channel, but Limited State is used for a lot of the videos flagged by MACC, who don’t think the tactic goes far enough.

Sarah thinks that YouTube’s hate speech policies are really just meant to assuage advertisers. “YouTube created the Limited State specifically to appease advertisers so that ads wouldn’t show up to offensive videos, while also not angering their content creators,” she says. “We see it very much as a half measure, an appeasement, but it is not the same as taking it off.”

More Alt-Right, But Less IRL

Since the 2016 election and the rise of the alt-right in the public sphere, anarchists and Antifa have been coming out en masse to physically confront white supremacists intent on rallying and speaking in public. But especially in the wake of the violence in Charlottesville, a massive pushback has made it harder for the alt-right to gather publicly. It’s been a positive development for MACC, who are accustomed to facing off against white supremacists IRL, but it’s also meant a change in battle tactics.

“That’s really when we started to shift our focus,” says Spark, a software engineer and a member of MACC who helped build the No Platform plug-in, who spoke with Inverse over the phone. “[We said] ‘Okay. Let’s look at where they are now. Go where they are. Stop their recruiting. Stop them being able to spread these hateful messages.’ [We] arrived at YouTube as being one of the more egregious ones that was possible to make a dent in, partially because YouTube does have a hate speech policy that these videos are violating, and they are just not enforcing their own hate speech policy.

“And then also, all these videos have millions and millions of views because YouTube itself is such a popular platform.”

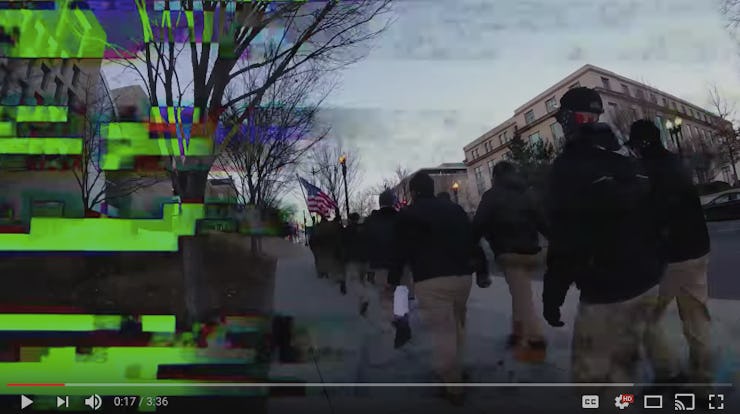

A screenshot of a YouTube video showing members of the Rise Above Movement in training. The Anti-Defamation League has called the organization, which is based in Southern California, a white supremacist group.

The Weird World of Hate Speech on YouTube

For anyone who’s never thought to try, it’s a little shocking how easy it can be to find white supremacist content on YouTube. The Atomwaffen channel might be gone, but it took this writer about two minutes of searching “Atomwaffen” to land on another white supremacist channel. It featured a 50-minute, documentary-style piece of white pride propaganda. Titled “Our Time Is Coming - NWF” and seemingly created by the neo-nazi group the Northwest Front, the video was not in a limited state, and it had over 2,000 views.

Spark — who asked to use a pseudonym out of doxxing concerns — says that she had no idea how big of a problem neo-nazi content was on YouTube before she met up with MACC members to flag videos at a research party. “Before I went to that event, I had no idea how egregious it was,” she says. “I knew it was a problem, but then you see that there’s just a video that’s like, ‘Hitler was right…’ You know, it’s just really way more clearly stated (on YouTube) than I thought it was.”

The white supremacist content on YouTube can be as bizarre as it is prolific. “There was a whole genre we discovered, where they will take Disney songs, a lot of children’s songs, or just popular pop songs and change all the lyrics to be explicitly white supremacist,” Spark says. “That’s been the latest thing that we’ve been targeting is mainly these music videos, where it’s like they’ll sing Hakuna Matata and replace Hakuna Matata with ‘1488’ which is a nazi number.”

A spoof of the song 'Arabian Nights' from Aladdin features hateful lyrics about refugees.

Algorithms as a Recruiting Method

On top of being a hotbed for hate speech, Spark says there’s another reason to be concerned about YouTube as a platform for white supremacy: recruiting.

“Because of the recommendation algorithm, if someone starts to look at slightly right-wing things, or pick-up-artist things — which are also a big way in — they will kind of go down this rabbit hole and get into this really directly white-supremacist content,” she says.

As the sun begins to set and MACC members start running out of flyers, not everyone leaving Google’s large glass doors has been receptive to their efforts. “I don’t care,” one man says blankly, veering around a young girl with dramatic eye makeup as she tries to hand him a flyer.

MACC has also gotten radio silence from YouTube itself. Since they started their campaign four months ago, Sarah says that MACC has had no response from the platform, nor have the platform’s policies or level of enforcement changed, according to the group, beyond a few videos flagged by MACC moving into limited state. Inverse reached out to YouTube for comment on this story, but has not yet received a response.

Between a Rock and a Grey Area

Curbing hate speech is a really complex challenge for social media behemoths like YouTube, according to Jonathan Vick, the Associate Director of Investigative Technology and Cyberhate Response at the Anti-Defamation League. Vick has been working with YouTube to get hateful content taken off the site for the better part of a decade. “They’ve certainly upped their game as of late,” he says, referring to limited state.

Vick says the sheer number of users on services like YouTube poses one of the biggest problems for curbing bad actors. “The amount of things posted in the course of an hour is astronomical. It’s beyond human capability to manage,” he says. “The technology has gotten ahead of us.”

Catching up could take a while. In testimony before Congress last week, Mark Zuckerberg said that it would probably take Facebook up to a decade to create algorithms sophisticated enough to flag hate speech across the site. Vick thinks YouTube could throw its hat in the ring. “They should be researching and investing in technology to try and head off this problem,” he says. “Absolutely; they have the resources, they have the brains, they have the time, and there’s a lot of work to be done.”

For now, defining prohibitable hate speech is increasingly becoming a sore point for all social media platforms. Reddit’s CEO Steve Huffman found himself in hot water in April for saying that it wasn’t Reddit’s responsibility to ban racist comments, placing the onus instead on community moderators. When it comes to policing words, both Huffman and Zuckerberg have argued that the complexity is rooted in context: how is something said, and by whom?

But when it comes to video, is there any context that justifies some of the content flagged by MACC, such as a tribute video to Atomwaffen, full of footage of masked men shooting guns and yelling about genocide? Spark doesn’t think so.

“Free speech does not obligate a business to promote or profit off of nazis and neo-nazis. YouTube does not need to, there’s nothing making YouTube allow this content on their platform.”