Facebook Needs to Make a Babelfish to Achieve Human-Level Intelligence

Douglas Adams' translating fish is inspiring researchers.

One of Facebook’s biggest technology goals is also one of the biggest in computing: How can a person speak to a device like Amazon Echo and get a logical answer in return?

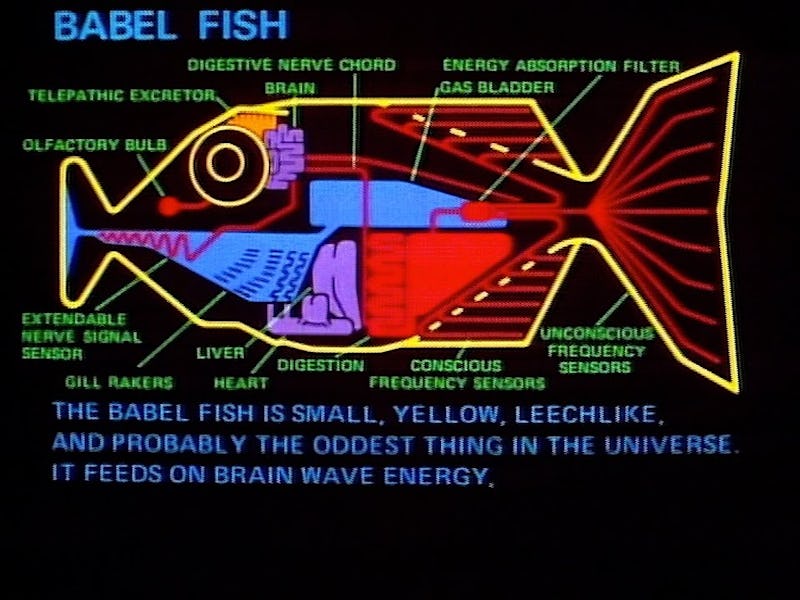

Even the first half of that question requires something akin to the babel fish, a creature in Douglas Adams’ The Hitchhiker’s Guide to the Galaxy that translates any noises into the host’s native tongue.

“The right mental model to have in mind is that these are modern day babel fish,” Shubho Sengupta, who works on A.I. research at Facebook, said recently at London’s Deep Learning Summit. “It’s kind of overselling it a bit, because you can’t go from any language to any language, but that’s the right picture, about taking one sequence and going to another sequence.”

Sengupta works as part of the Facebook Artificial Intelligence Research team, or FAIR. This is an ambitious group aimed at developing systems “with human level intelligence.”

Part of reaching this goal is about creating a machine that understands humans effortlessly, much like the babel fish starts translating by just slipping inside the host’s ear.

Consider all the different ways to say something, and the number of possibilities involved, and the task becomes mind-bogglingly huge. A speech-to-text system, known as automatic speech recognition (ASR), uses roughly 20 to 50 million parameters, while a translation system can use more than 100 million.

A lot of data needs a lot of power to crunch through and understand. Training an ASR network takes 20 to 50 exaflops of computation. Here a flop is a single floating point calculation (the same term is often used for calculations per second, as a measure of hardware speed), and an exaflop is a quintillion of them. In other words, these networks require a large amount of computing power, and on today’s hardware that means training runs take a few months.
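To get a feel for those numbers, here is a rough back-of-the-envelope sketch in Python. It rests on two assumptions that are not from Sengupta's talk: that the 20 to 50 exaflops figure is a total count of calculations for one training run, and that the hardware sustains a hypothetical 5 teraflops (5 trillion calculations per second). The function name `training_days` is made up for illustration.

```python
EXA = 10**18   # one quintillion
TERA = 10**12  # one trillion

def training_days(total_ops, sustained_ops_per_second):
    """Estimate training time in days from a total operation count
    and an assumed sustained hardware throughput."""
    seconds = total_ops / sustained_ops_per_second
    return seconds / 86_400  # seconds in a day

# Assumption: 5 teraflops sustained, a hypothetical figure chosen for illustration.
ASSUMED_RATE = 5 * TERA

for exa_ops in (20, 50):
    days = training_days(exa_ops * EXA, ASSUMED_RATE)
    print(f"{exa_ops} exaflops at 5 teraflops sustained: about {days:.0f} days")
```

Under those assumptions the estimate comes out at roughly 46 to 116 days, which is in line with training runs that last a few months. A faster or slower sustained rate shifts the answer proportionally.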

“We’re seeing a revival of this high-performance computing, powered by GPUs, just to train these centers,” Sengupta said.

Graphics processors, or GPUs, are good at completing many calculations at once instead of working through them in sequence, making them ideal for such tasks. GPU maker Nvidia has thrived in recent years as a result. After dominating the nineties with realistic video game graphics, the company has found a new market selling its chips for training artificial intelligence networks. At the 2016 Consumer Electronics Show, it unveiled the Drive PX2 chip, designed specifically for powering A.I. inside cars, with around eight teraflops of power.

Advancements are also coming from the software side. In February, Baidu Research unveiled the Deep Voice text-to-speech system, which uses new techniques to synthesize audio from input text 400 times faster than previous systems. There’s a long way to go, though.

“Translation is the single biggest network for any large internet company,” Sengupta said.