At the Wednesday opening of its I/O conference, Google unveiled a supercomputer powered by the company’s upcoming Tensor Processing Units (TPUs). It’s part of Google’s overall pivot toward A.I., and even amid the wider excitement over machine learning, Google is framing the TPU as a game-changer.

So just what are TPUs? The custom-made artificial intelligence computer chips represent Google’s bid to create the tool that machine learning developers have needed for years. Networked together, they make up a super-efficient A.I. supercomputer. TPUs are based on the same technology that was used to build the world-champion AlphaGo A.I., and they could allow Google to begin fully realizing its A.I.-driven future.

Artificial intelligence is inarguably the most active area of coding research right now, and could realize the majority of the coolest projected software breakthroughs over the next decade. Whether it’s powering a Jarvis-like virtual assistant or just helping to improve the safety of a self-driving car, there will be a whole lot of machine learning over the next few decades. The computing world has been desperate to find a way to run these algorithms more efficiently, and thus more cheaply, than traditional computer processors are capable of delivering.

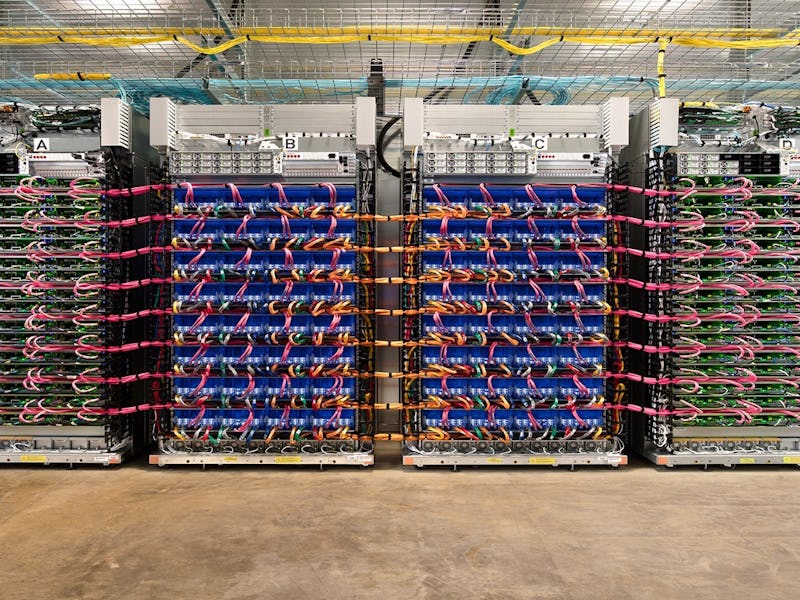

A Cloud TPU unit.

Facebook’s Big Sur and Big Basin servers are one such attempt, based around carefully networked GPUs, or Graphics Processing Units. GPUs are traditionally thought of as video cards, but the specific type of computation they do well (floating-point calculation) just so happens to be crucially important to machine learning. GPUs have also come to prominence because they can more efficiently tackle the blockchain “hashing” problems associated with bitcoin mining.

Long story short: Google’s new TPU-based computers, called Cloud TPUs, are very good at floating-point operations. Very good. Google claims each one can deliver 180 teraflops of floating-point performance, and that when 64 of them are networked together into a supercomputer called a TPU Pod, this leads to speeds of up to 11.5 petaflops. To put that into perspective, Facebook’s above-mentioned Big Basin servers deliver just 10.6 teraflops each, less than a tenth of one Cloud TPU.
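For readers who want to check the arithmetic, here is a minimal sketch in Python that uses only the figures quoted above. The constant and function names are made up for illustration, and the numbers are vendor claims rather than measurements.

```python
# Figures as quoted in the article (claimed peak numbers, not benchmarks).
CLOUD_TPU_TFLOPS = 180.0    # one Cloud TPU, per Google
CLOUD_TPUS_PER_POD = 64     # Cloud TPUs networked into one TPU Pod
BIG_BASIN_TFLOPS = 10.6     # one Big Basin server, as quoted above


def pod_petaflops(per_unit_tflops, units):
    """Aggregate peak throughput in petaflops, assuming perfect scaling."""
    return per_unit_tflops * units / 1000.0


pod = pod_petaflops(CLOUD_TPU_TFLOPS, CLOUD_TPUS_PER_POD)
ratio = BIG_BASIN_TFLOPS / CLOUD_TPU_TFLOPS

print(f"TPU Pod peak: {pod:.2f} petaflops")       # TPU Pod peak: 11.52 petaflops
print(f"Big Basin / Cloud TPU: {ratio:.3f}")      # Big Basin / Cloud TPU: 0.059
```

The 11.5-petaflop figure is simply 64 times 180 teraflops, so it assumes the units scale perfectly when networked; real workloads would likely see less.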

Power is the Key

As mentioned, though, the important part is electrical efficiency. It’s not good enough to be able to do a lot of work; you’ve got to be able to do it for very little power. While Google hasn’t detailed wattage numbers for the Cloud TPU, it has previously released figures for the TPU in general. According to Google, its TPUs can offer a 30- to 80-times increase in floating point operations per watt of power used, and if that carries over to the 11.5-petaflop TPU Pod, the impact could be enormous.

It’s not just that Google and other large companies will save a whole lot on their power bills by making their processes more efficient (Google is reported to have used about 4.4 million megawatt-hours of electricity in 2014). It’s that with these savings in hand, it’s possible for major companies to deliver far more ambitious machine learning services.

Practicality Matters

As mentioned, the famous AlphaGo Go-playing A.I. did its thinking mostly with TPUs, but the A.I. code itself could have been executed on any sufficiently powerful machine. TPUs weren’t necessary for AlphaGo to exist in theory, but they were necessary for it to exist in the real world, with realistic demands on budget, network speed, electrical current, and even physical space in the play area.

In the same way, none of the incredible machine-learning-based services of the future will be made fundamentally possible by the TPU, but the TPU could be the invention that lets them come into existence in practice. It’s only a stepping stone to a truly A.I.-tailored computing future, but an important one.

It could begin today.