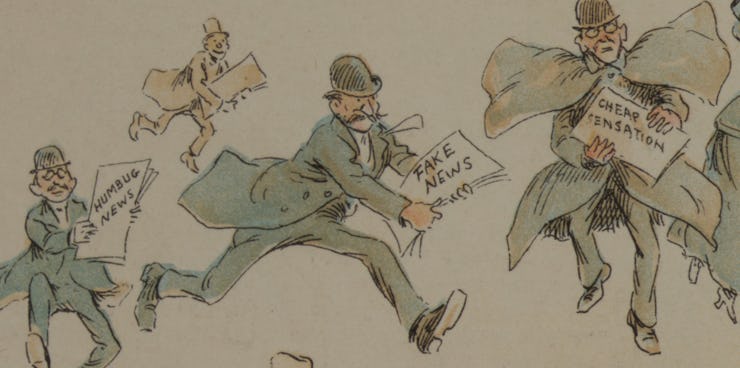

Artificial Intelligence is Going to Destroy Fake News

But A.I. can also cause the volume of fake news to explode.

With the rise of email came the rise of spam filling inboxes. Email has grown sophisticated faster than spamming technology, and now the internet’s junk mail is usually caught in a folder. Out of sight and out of mind are messages with the subject line “Kindly get back to me urgently” and the greeting “Dear Beneficiary.”

There’s good news for anybody who sees fake news as another form of spam. That means not the sort of story that’s simply true but politically difficult for the president, but actual, fake, conspiracy theory-baiting chum.

At least that’s what Dean Pomerleau, research scientist at Carnegie Mellon University’s Robotics Institute, said recently during a panel in New York on the proliferation of fake news. We solved the spam problem using artificial intelligence, he argued, and with A.I., we can solve the problem of fake news by separating credible news from the misinformation. Wheat from chaff, etc.

Also on the panel, put on by the New York Daily News Innovation Lab in Manhattan, was CNN political commentator Sally Kohn, who was well aware of her network’s reputation as a purveyor of fakery over its coverage of the notoriously sensitive President Donald Trump.

“According to half the country, that means I’m an expert on fake news,” Kohn said. “As a citizen, I’m invested in facts. As a journalist, even an opinion journalist, I’m grounded in facts.”

At the panel, Pomerleau spoke about ways A.I. can combat fake news. He co-directs the Fake News Challenge, a competition to create a fact-checking tool. The idea for the challenge started shortly after the election. So far, almost 200 teams have signed up and 300 people have registered for the Fake News Challenge Slack channel.

“We were speculating among friends, what could we as machine-learning people do to improve the situation moving forward? That was the genesis of the idea,” Pomerleau tells Inverse.

Even the challenge problem for the annual International Conference on Social Computing, Behavioral-Cultural Modeling, & Prediction and Behavior Representation in Modeling and Simulation is fake news and propaganda. “Simulation studies, data science studies, machine learning studies, and network science studies are all encouraged,” the prompt announced when it was posted recently.

Inverse asked Pomerleau just how fake news will be killed, and here’s what he said.

How do we see A.I. creating fake news in the future?

I think in the near future, technology is likely to help in the creative process. It won’t be too long before generated video can be created, very much like with Photoshop. Those two things together will really undermine our ability to believe what we see. Image processing and audio processing tools will make it easy for just about anyone to create fake news.

How can A.I. fight fake news?

Smart filtering, content analysis, and natural language processing can all be used as signals to an algorithm that’s attuned to detect fake news, just like we have filters for spam to prevent it from getting into your inbox. There are ways A.I. can assist humans in identifying fake news, or in the future, do it automatically.
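To make the spam-filter analogy concrete, here is a minimal sketch of a naive Bayes text filter in Python, the classic technique behind many early spam filters. It is an illustration only, not the method Pomerleau or the Fake News Challenge uses. The class name `NaiveBayesFilter`, the labels `junk` and `ok`, and the four training messages are all assumptions made up for the example, with two of the messages built around the spam phrases quoted earlier in this article.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFilter:
    """Toy two-class naive Bayes filter with add-one smoothing (hypothetical)."""

    LABELS = ("junk", "ok")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, text, label):
        """Record one labeled message."""
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def score(self, text, label):
        """Log-probability of the label given the words in the text.

        Assumes at least one message has been trained for every label.
        """
        vocab = set()
        for counts in self.word_counts.values():
            vocab.update(counts)
        total_words = sum(self.word_counts[label].values())
        log_prob = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in tokenize(text):
            count = self.word_counts[label][word]
            # Add-one smoothing keeps unseen words from zeroing out the score.
            log_prob += math.log((count + 1) / (total_words + len(vocab)))
        return log_prob

    def classify(self, text):
        """Return the label with the higher score."""
        return max(self.LABELS, key=lambda label: self.score(text, label))


# Made-up training messages, for illustration only.
spam_filter = NaiveBayesFilter()
spam_filter.train("Kindly get back to me urgently", "junk")
spam_filter.train("Dear Beneficiary, claim your funds urgently", "junk")
spam_filter.train("Meeting notes from the panel on Tuesday", "ok")
spam_filter.train("Lunch on Friday to discuss the panel notes", "ok")

print(spam_filter.classify("Dear Beneficiary, kindly reply urgently"))  # junk
print(spam_filter.classify("Notes from the Friday meeting"))  # ok
```

Word counts are only one kind of signal. A real filter would be trained on far more data and would combine many signals, and, as Pomerleau explains below, news is harder to label than spam.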

What are some ways the Fake News Challenge has addressed fake news so far?

We kicked it off as a casual wager on Twitter, thinking naively we could jump to the end stage and build a system that can classify real news versus fake news. It turns out it’s a much more subtle problem than that. You run into problems like opinion pieces or satire that fall into a gray area, which makes it a much more challenging task that so far requires some human judgment.

We backed off the task of trying to predict the fake versus real distinction to focus on a tool that helps fact-checkers by solving a narrower problem. It will allow fact-checkers or journalists to gather the best stories on both sides of an issue. By gathering those pro-con arguments quickly, human fact-checkers will be able to quickly assess what the truth is and debunk things that are clearly made up.

How can A.I. help the average user who might read or run into fake news?

We’ve actually started brainstorming about how the kind of tool we’re building can assist the average individual rather than news organizations. Suppose you read an article that makes a factual claim. You can imagine that if you highlight it or click a button, our detection tool finds other content on the internet that takes the pro or con stance, so you can find out just how credible the claim is in the story you’re currently reading.
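As a rough illustration of the pro-or-con idea Pomerleau describes, the Python sketch below groups text snippets by their stance toward a highlighted claim. It is a deliberately crude heuristic under stated assumptions, not the tool the challenge is building: relatedness is measured by plain word overlap, a snippet counts as "con" if it adds a word from a small hand-picked cue list, and the cue list, the threshold, the function names, and the example claim and snippets are all hypothetical.

```python
import re

# Hypothetical cue words; a real system would learn such signals from data.
REFUTING_CUES = {"not", "no", "false", "hoax", "debunked", "denies", "fake"}


def tokens(text):
    """Return the set of lowercase words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap(claim, snippet):
    """Jaccard word overlap between the claim and the snippet (0.0 to 1.0)."""
    claim_words, snippet_words = tokens(claim), tokens(snippet)
    union = claim_words | snippet_words
    return len(claim_words & snippet_words) / len(union) if union else 0.0


def stance(claim, snippet, min_overlap=0.2):
    """Label a snippet as 'pro', 'con', or 'unrelated' to the claim."""
    if overlap(claim, snippet) < min_overlap:
        return "unrelated"
    # Only cue words the snippet adds beyond the claim count as refutation.
    added_cues = (tokens(snippet) - tokens(claim)) & REFUTING_CUES
    return "con" if added_cues else "pro"


def group_by_stance(claim, snippets):
    """Sort snippets into pro, con, and unrelated lists."""
    groups = {"pro": [], "con": [], "unrelated": []}
    for snippet in snippets:
        groups[stance(claim, snippet)].append(snippet)
    return groups


# Made-up claim and snippets, for illustration only.
CLAIM = "The city council approved the new bridge"
SNIPPETS = [
    "City council approved the new bridge on Monday",
    "Report that the council approved the new bridge is false",
    "Local bakery wins pastry award",
]

for label, items in group_by_stance(CLAIM, SNIPPETS).items():
    print(label, items)
```

A heuristic like this is easily fooled by paraphrase, sarcasm, or double negatives, which is part of why the task draws on machine learning and still leaves the final judgment to a human reader.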

How might tech companies respond to fake news?

I would love to see a more concerted effort from tech companies. They’ve set up industry groups for other things. They recently created partnerships for A.I., and I’d like to see them do something similar and form a best-practices industry group to address fake news.

I think one of the biggest problems is the economy has made it quite lucrative to game the system and get more clicks and eyeballs, because that’s how you make money on the internet now. The model that tech giants have created has seriously undermined the news media industry, making it almost incumbent on news media creators to write tantalizing headlines that get people to click on them for whatever reason.

[The Fake News Challenge has] caught the attention of well-meaning people who see fake news as a big problem and want to use their machine learning skills to try to address it. I’ve been a little disappointed that the tech community and machine learning community haven’t faced up to the responsibility of using their skills in development to benefit society.

One of the reasons we’ve attracted so many smart people from the tech community is it does offer the opportunity to use some of the cutting-edge machine learning and natural language processing work in A.I. to do something for the social good right here and right now.

This interview has been edited for clarity and brevity.