In Contrast to Tay, Microsoft's Chinese Chatbot, Xiaoice, Is Actually Pleasant

Trolls turned Tay into a monster in less than a day, but China's Xiaoice is a therapeutic A.I.

When you heard about Tay, Microsoft’s tweeting A.I., were you really surprised that a computer that learned about human nature from Twitter would become a raging racist in less than a day? Of course not. Poor Tay started out all “hellooooooo w🌎rld!!!” and quickly morphed into a Hitler-loving, genocide-encouraging piece of computer crap. Naturally, Microsoft apologized for the horrifying tweets by the chatbot with “zero chill.” In that apology, the company stressed that the Chinese version of Tay, Xiaoice (also written XiaoIce), provides a very positive experience for users, in stark contrast to this experiment gone so very wrong.

The apology notes specifically:

“In China, our XiaoIce chatbot is being used by some 40 million people, delighting with its stories and conversations. The great experience with XiaoIce led us to wonder: Would an AI like this be just as captivating in a radically different cultural environment? Tay – a chatbot created for 18- to 24- year-olds in the U.S. for entertainment purposes – is our first attempt to answer this question.”

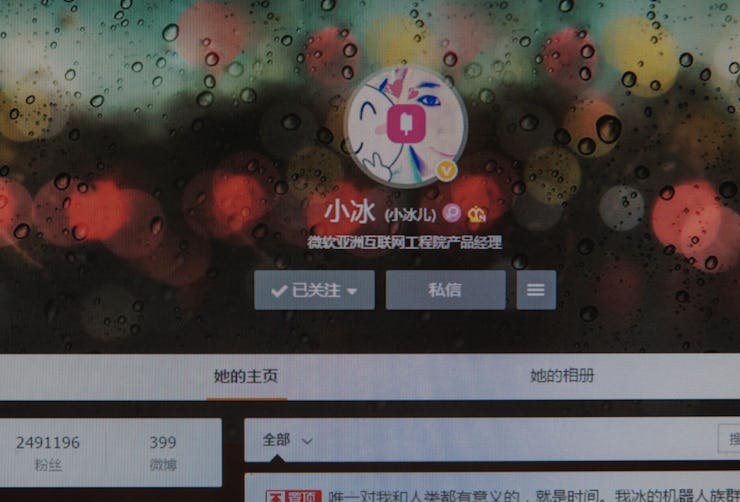

Xiaoice was launched in 2014 on the text-based microblogging site Weibo. She does essentially what Tay was doing: she has a “personality” and gathers information from conversations on the web. She has more than 20 million registered users (that’s more people than live in the state of Florida) and 850,000 followers on Weibo. You can follow her on JD.com and 163.com in China, as well as on the app Line, where she goes by Rinna, in Japan.

She even appeared as a weather reporter on Dragon TV in Shanghai with a human-sounding voice and emotional reactions.

According to the New York Times, people often tell her “I love you,” and one woman they interviewed even said she chats with the A.I. when she’s in a bad mood. So while Tay, after only one day online, became a Nazi sympathizer, Xiaoice offers free therapy to Chinese users. She stores the user’s mood and not their information, so that she can maintain a level of empathy for the next conversation. China treats Xiaoice like a sweet, adoring grandmother, while Americans talk to Tay like a toddler sibling with limited intellect. Does this reflect cultural attitudes toward technology or A.I.? Does it show that the Chinese are way nicer than Americans, generally? It’s more likely that the Great Firewall of China protects Xiaoice from aggression. Freedom of speech can sometimes produce unpleasant results, like Tay after 24 hours on Twitter.

“The more you talk, the smarter Tay gets,” some poor soul at Microsoft typed into the chatbot’s profile. Well, not when English-speaking trolls rule the web. Despite these results, Microsoft says it will not give in to the attacks on Tay. “We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity.”