Face the Music: How the "Eye Conductor" Helps Paralytics Rock the Fuck Out

Interaction designer Andreas Refsgaard wants the disabled to be able to express themselves -- loudly.

Andreas Refsgaard, an interaction designer and creative coder living in Amsterdam, has designed a musical interface that allows people with physical disabilities to make music. The Eye Conductor uses eye tracking software and a computer’s webcam to translate facial movements into different components of musical composition. Users can train themselves to play the thing with their face, which means paralyzed people — people who can no longer play instruments — can have a second chance at making beautiful (or just loud) sounds. The right gaze literally strikes a chord with Refsgaard’s invention. The right expression expresses itself out loud.

Refsgaard spoke to Inverse about the motivation behind his project, the struggles he ran into along the way, and what he learned when he gave people who’d lost the ability to make music another chance.

What is your background with music?

I wouldn’t call myself a musician because I only play for fun, but I play guitar and bass. Ableton is a music program, a digital audio workstation that’s similar to recording software like Logic or Pro Tools or GarageBand. I took a course on it, and I wasn’t that great at making music with it but I was fascinated by the possibilities I saw. Then a professor and I started to collaborate and do some more artsy installation-type stuff where he would design the sounds and I would hook up sensors, for instance a depth camera, so when people walked past, they would be controlling some aspect of the project.

So you’re an interaction designer and creative coder. Could you explain what those titles mean?

All the things I design are interactive. So if I were to make some sort of furniture, then it would have some functionality that would make it react to something. I’m also a creative coder. Compared to a lot of other people — for instance I studied in Copenhagen at the Copenhagen Institute of Interaction Design where people come from a lot of different backgrounds — I’m a bit more toward the coding side. I also call myself a “creative coder” because I’m not a proper coder. I’m not that good at it but I can do some stuff really fast and I can connect things and I’m good at coming up with novel ideas.

What was it that inspired you to help people with physical disabilities make music?

After high school for two or three months I was a caretaker for someone with muscular dystrophy. Being with him all the time made me realize some of the things that I couldn’t help him with. One of them was expressing himself in a creative way. It wasn’t that I thought, “Okay here’s a problem I need to solve,” because at that time I was young and didn’t have any professional skills. But it stuck with me.

What about in terms of technical inspiration?

EyeWriter, a project I saw about a year ago, is a drawing program that was designed for a guy who used to be a graffiti artist but then was paralyzed. Some of his friends were coders and made a tool for him with eye tracking that let him draw. And then since I had been playing around with sensors and stuff in music software, I made a connection. I did it as my final project at school.

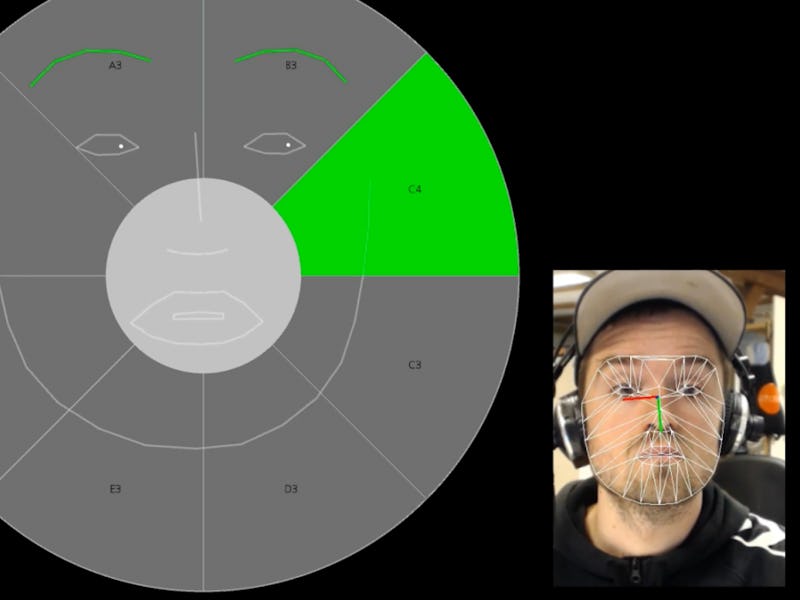

Someone testing out the Eye Conductor

How did music end up being the creative field you wanted to explore?

I think perhaps because I enjoy music a lot myself and in terms of the time I had for the project. It just became clear that music was what I wanted to work with. Then I was very inspired by Eyewriter in terms of making graphics.

Music seems like a great choice because it’s universal. I can’t think of anyone who doesn’t like music.

In the beginning I actually wanted to test out how important music was for the group I was designing for. The early steps were just observing how people with different physical disabilities interact with music. One home I visited had Music Thursdays. Some can point a bit or play a little on a keyboard, and some can’t do anything at all, but the fact is they show up for Music Thursdays.

Between observing and then making the device, how long did it take?

Often in a design process those would be two separate things, but I don’t like that approach. I like building from the very start and then having conversations about what I’ve built. Then I modify or refine it.

What was it like to see people using it for the first time?

It was quite nice, yeah. Because some of the people couldn’t speak, the connection can be hard. But then when they used my Eye Conductor, we really connected. They were looking at me and smiling or acting really happy, or when I just demo’d it they were really focused. It was a beautiful example of the way music can help people interact and express emotion.

A photo from Refsgaard's research stage, during which he visited several homes for the physically disabled

Without getting too technical, what was the biggest problem you ran into while you were designing the Eye Conductor?

For the system to work you have to sit in the same pose as we’re sitting right now in front of our laptops because the image you see of me right now is straight on and good for tracking my face and eyes. But if you’re with someone in a wheelchair who doesn’t have control over their posture or their back or neck, for instance, then sometimes they would sit more sideways and the system couldn’t track their facial movements. And then sometimes it would be a bit uncomfortable for me to try to say, “OK, for this to work you have to sit straighter.” And then with them being nonverbal, how do we communicate this?

If someone’s head was tilted like that, could you theoretically hold the laptop in a way that picks up their facial gestures?

Yeah. So I didn’t really go into solving that issue but a lot of people have computers mounted to their wheelchairs and the computer is on a pole and then on that pole there’s a mounting device and they angle it in the right direction to point at the person’s face. There’s no position that’s right or wrong, it’s just about getting the sensors, which are the webcam and an eye tracker, to be straight on to the head. That was a bit challenging. It was more of a hardware thing because otherwise I would have to try and build a structure and that would be messy. I’m not so good at that.

This screenshot shows how the program uses facial tracking to produce sound

Did you do the whole thing by yourself or did you run into technical issues that only other people knew how to solve?

I did it by myself but on the sound design side I got help from that professor I told you about. Basically just sound design stuff. I also had an advisor who gave me some pointers in terms of a programming language called Max, which he was really great at. He just helped me to be more comfortable with certain programming details. Of course a lot of it was new but the basics of it I already knew from previous experience. I made the Eye Conductor in eight weeks and people said, “Oh that’s crazy, how can you make that in eight weeks?” But I made some other stuff that’s also advanced in terms of working with sensors and music software and that took way longer because I didn’t know how to do it. Now that I know how, it’s not as difficult, even though this project was more complicated. It’s also important for me to point out that it’s a fully working prototype but it’s not a product. So people can’t go out and buy it and install it on their computer.

I was going to ask you if you have plans to sell it?

I actually don’t want to sell it, I want to give it away. I want to make it an open source thing but I can’t promise anything now. I don’t have any funding for it yet and I have work and other things to do. I got a message from a guy in Michigan for instance who used to be a producer and then he got ALS. I gotta make it somehow available to all these people. It’s very important for me to at some point, and I can’t promise when but hopefully quite soon, package it in a way so that people can either download it all as one thing that would run on most computers or actually try it out in the browser. The idea behind making it a browser thing is that it would only require people to have a computer with internet access and whatever replacement for a mouse they might have on their computer. If I make it open source I can provide the basic demo and then people can add their own twists to make it their own.

Another person testing out the Eye Conductor

It’s nice to hear that you’re doing this because you want to help people and not because you want to get rich or something like that.

The tools I built it with — at least the working prototype — are open source. Why would I then get the credit? For example the face tracking system that I use for my prototype which enables people to create a sound by raising their eyebrows or opening their mouths, that’s a thing called FaceOSC made by a guy named Kyle McDonald and that’s an open source system. I don’t think I would have gotten as far as I did without that piece of software.