Google's A.I. Beats Facebook in Race to Win at the Ancient Game of Go

It was unthinkable just a few years ago that artificial intelligence could win at the ancient game.

A little before midnight Pacific time on Tuesday, Mark Zuckerberg launched a preemptive strike against his competition at Google with a pronouncement that he likely knew was wrong:

“The ancient Chinese game of Go is one of the last games where the best human players can still beat the best artificial intelligence players.”

No doubt Zuckerberg knew that about twelve hours later, Google would trumpet that, well, actually, its artificial intelligence had already beaten a human professional: Fan Hui, the reigning European Go champion. Google’s lauded achievement, published in the journal Nature on Wednesday afternoon, is AlphaGo, a program its DeepMind researchers built on deep neural networks. AlphaGo beat Fan Hui five games to zero.

Meanwhile, Zuckerberg wrote in the same post Tuesday night that Facebook is getting close: “Scientists have been trying to teach computers to win at Go for 20 years. We’re getting close, and in the past six months we’ve built an A.I. that can make moves in as fast as 0.1 seconds and still be as good as previous systems that took years to build.”

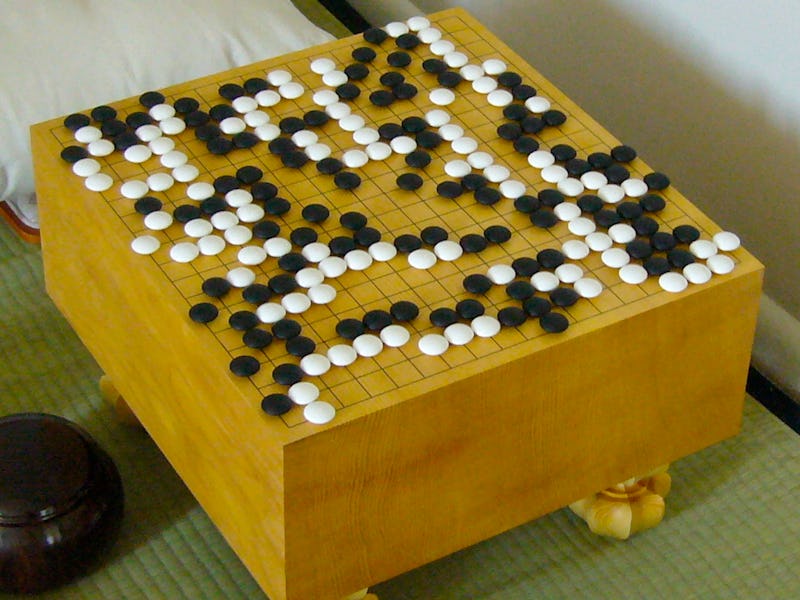

The game of Go is older than the story of Jesus, but it remains notoriously tough for computers to beat. Algorithms crush humans at tic-tac-toe, chess, and checkers, but the sheer number of possible board positions in Go (more than there are atoms in the observable universe) has been too much for computers to brute-force their way to victory. Code couldn’t best human Go champs. Until now.
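For a rough sense of that scale, here is a back-of-envelope check in Python. It is a sketch that assumes the standard 19-by-19 board and the commonly cited estimate of about 10^80 atoms in the observable universe. Treating each of the 361 intersections as empty, black, or white gives an upper bound of 3^361 board configurations. Most of those aren’t legal positions, but the count of legal ones (roughly 10^170) is still vastly larger than the atom estimate.

```python
import math

# A standard Go board is 19 x 19, giving 361 intersections.
POINTS = 19 * 19

# Each intersection is empty, black, or white, so 3^361 is an upper bound
# on board configurations (many of these are not legal positions).
upper_bound = 3 ** POINTS

# Assumption: the commonly cited order-of-magnitude estimate for atoms
# in the observable universe.
ATOMS_ESTIMATE = 10 ** 80

# math.log10 accepts arbitrarily large Python ints.
exponent = math.log10(upper_bound)

print(f"3^{POINTS} is about 10^{exponent:.1f}")  # prints: 3^361 is about 10^172.2
print(upper_bound > ATOMS_ESTIMATE)              # prints: True
```

Even this crude bound overshoots the atom estimate by more than 90 orders of magnitude, which is why exhaustive search, the approach that worked for checkers, was never an option for Go.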

For decades, computer scientists have considered winning the game something of an A.I. acid test, and some experts say AlphaGo got there about ten years ahead of schedule. Unlike the [Kasparov-killer Deep Blue](https://en.wikipedia.org/wiki/Deep_Blue_(chess_computer)), which could crunch through the possible moves and select the best, AlphaGo pairs machine learning with search. Its neural networks, trained to predict likely moves and judge board positions, steer a search toward the most promising lines of play.

From Google’s official blog:

> We trained the neural networks on 30 million moves from games played by human experts, until it could predict the human move 57 percent of the time (the previous record before AlphaGo was 44 percent). But our goal is to beat the best human players, not just mimic them. To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and adjusting the connections using a trial-and-error process known as reinforcement learning. Of course, all of this requires a huge amount of computing power, so we made extensive use of Google Cloud Platform.

Now, this might feel like a lot of hullabaloo over whose computer can swing its A.I. dick harder at an ancient board game. But the end-game is to take the underlying principles behind these programs and lay the foundation for more generalized machine learning.

Zuckerberg invoked A.I.’s potential to tackle environmental problems and analyze disease. At a press conference, the lead programmer on Google’s AlphaGo team said a likely first application of the new technology would be product recommendations.