Bee Vision Is Helping an AI Researcher Get Tiny Drones to Land Autonomously

The buzz on single-camera drones.

You can make drones incredibly small (Harvard University's RoboBee weighs 80 milligrams, roughly a twenty-eighth of the weight of a U.S. dime), and you can have unmanned vehicles fly autonomously. But you can't yet have the tiniest drones fly without human guidance. To pave the way for wee drones that land without human input, artificial intelligence researcher Guido de Croon of Delft University of Technology in the Netherlands has demonstrated how to get drones to land using no sensor other than a single camera. It is a step toward minimizing the gear that autonomous drones must carry on board.

De Croon likens this to seeing with one eye. The biological comparison is deliberate: he's looking to bumblebees and other flying insects for help. What a single camera lacks in depth perception it makes up for with a phenomenon called optical flow, as de Croon writes in the journal Bioinspiration & Biomimetics. Optical flow is thought to be how bees can land on a flower even as the wind buffets them around.
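To see how a single camera can stand in for depth perception, here is a minimal sketch of one standard optical-flow quantity: time to contact, estimated from how fast an object's image grows. It is not taken from de Croon's paper. It assumes a pinhole camera, an object straight ahead, and a constant approach speed, and the function names (`angular_size`, `time_to_contact`, `observe`) and numbers are hypothetical. The point is that the object's real size and distance never need to be known, because the expansion rate only reveals their ratio to the approach speed.

```python
import math

def angular_size(width, distance):
    """Angle (radians) that an object of the given width subtends at the camera."""
    return 2.0 * math.atan(width / (2.0 * distance))

def time_to_contact(theta_now, theta_prev, dt):
    """Estimate seconds until contact from how fast an object's image expands.

    For small angles theta ~ width / distance, so theta / (d theta / dt)
    equals distance / approach speed: the object's real size cancels out.
    """
    expansion_rate = (theta_now - theta_prev) / dt   # backward difference
    return theta_now / expansion_rate

def observe(width, distance, speed, dt=0.02):
    """Two camera frames, dt seconds apart, while approaching at constant speed."""
    theta_prev = angular_size(width, distance + speed * dt)
    theta_now = angular_size(width, distance)
    return time_to_contact(theta_now, theta_prev, dt)

# A 5 cm flower 20 cm away, approached at 5 cm/s (true time to contact: 4 s) ...
tau_small = observe(width=0.05, distance=0.2, speed=0.05)
# ... looks the same as a flower twice as big, twice as far, approached twice as fast.
tau_big = observe(width=0.1, distance=0.4, speed=0.1)
print(f"{tau_small:.2f} s vs {tau_big:.2f} s")
```

Both calls return the same estimate, slightly above 4 s because of the finite frame interval and the small-angle approximation. A single camera cannot tell the two scenes apart, but for a gentle landing the time to contact is what matters.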

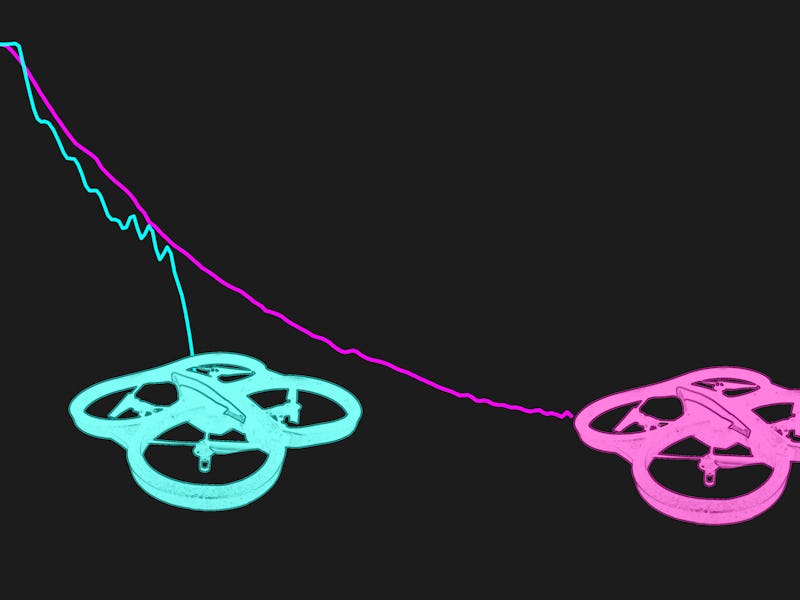

Optical flow works something like this. If you're speeding toward Manhattan, the large buildings in the distance drift slowly across your view, but the much closer highway signs whiz by. Insects rely on this relative motion to gauge their own speed and distance, which, de Croon writes, allows them to slow down and land gently. For robots, steering by optical flow tends to trigger oscillations as they get close to the ground. In the study published on January 7, de Croon showed he could use those oscillations to tell a Parrot AR.Drone when it was safe to land.
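The oscillation trick can be illustrated with a toy simulation. This is a simplified model under stated assumptions, not de Croon's code. A hovering drone is assumed to hold its height with a controller driven by optical-flow divergence (descent speed divided by height), with a fixed gain and an assumed 0.1-second sensing delay, and its height is treated as frozen during the test. Because the camera only ever measures speed divided by height, the loop's effective gain is gain/height: a gain that is stable high up makes the drone oscillate nearer the ground. Turning the gain up until oscillations appear then reveals the height. The conversion factor 2·delay/π comes from the textbook stability condition for a delayed loop x' = -a·x(t - τ), which is stable only while a·τ < π/2; a real drone would presumably calibrate this relation instead. The function names `oscillates`, `onset_gain` and `estimate_height` are hypothetical.

```python
import math

def oscillates(gain, height, delay=0.1, dt=0.001, duration=20.0):
    """Return True if a small vertical disturbance grows instead of dying out.

    Toy hover model (an assumption, not de Croon's code): height is frozen,
    the camera reports divergence = descent_speed / height, and the controller
    sets d(descent_speed)/dt = -gain * divergence measured `delay` s earlier.
    """
    lag = round(delay / dt)              # sensing delay in simulation steps
    speed = [0.01] * (lag + 1)           # history: a small initial descent (m/s)
    for _ in range(round(duration / dt)):
        divergence = speed[-1 - lag] / height             # delayed camera reading (1/s)
        speed.append(speed[-1] - dt * gain * divergence)  # forward-Euler step
        if abs(speed[-1]) > 1e6:         # clearly blown up: stop early
            return True
    window = round(2.0 / dt)             # compare two 2-second windows
    early = max(abs(s) for s in speed[lag + window: lag + 2 * window])
    late = max(abs(s) for s in speed[-window:])
    return late > early

def onset_gain(height, lo=0.1, hi=1000.0, iterations=20):
    """Bisect (geometrically) for the smallest gain that makes hovering oscillate."""
    for _ in range(iterations):
        mid = math.sqrt(lo * hi)
        if oscillates(mid, height):
            hi = mid
        else:
            lo = mid
    return hi

def estimate_height(gain_at_onset, delay=0.1):
    """Invert the toy model: a delayed loop x' = -a * x(t - delay) becomes
    unstable when a * delay = pi / 2, and here a = gain / height."""
    return gain_at_onset * 2.0 * delay / math.pi

if __name__ == "__main__":
    # One fixed gain: calm at 4 m, oscillating at 1 m.
    print(oscillates(40.0, height=4.0), oscillates(40.0, height=1.0))
    # Turning the gain up until oscillations start reveals the height.
    for true_height in (1.0, 2.0, 4.0):
        k = onset_gain(true_height)
        print(f"true {true_height:.1f} m, onset gain {k:.1f}, "
              f"estimate {estimate_height(k):.2f} m")
```

Bisection works here because, in this model, stability is monotone in the gain: every gain above the onset value oscillates and every gain below it settles. The bisection is geometric because the plausible gains span several orders of magnitude.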

Aping nature to design robots isn't exactly new, but expect to see more of it when it comes to drones. University of Pennsylvania drone researcher Vijay Kumar, for instance, notes that an eagle-inspired design allows a quadcopter to snatch a Philly cheesesteak out of the air (at around the 4-minute mark of the video).