Lidar, one of the most popular technologies used in autonomous cars, isn’t a perfect system — and one self-driving car startup has decided not to bother with it at all. California-based self-driving car company AutoX has constructed a guidance system that relies only on cameras, and its founder Jianxiong Xiao says that it’s a way better value than the expensive sensors favored by Google and Uber.

“They [lidar sensors] actually do not satisfy functionality requirements for automotive hardware,” Xiao tells Inverse.

In short, Xiao believes that lidar offers low resolution for the price. It also struggles to get readings in rainy or snowy conditions, Xiao says, and only really tells you how far away objects are. He adds that the multiple-thousand-dollar sensors have a short shelf life and, altogether, just aren’t worth the trouble.

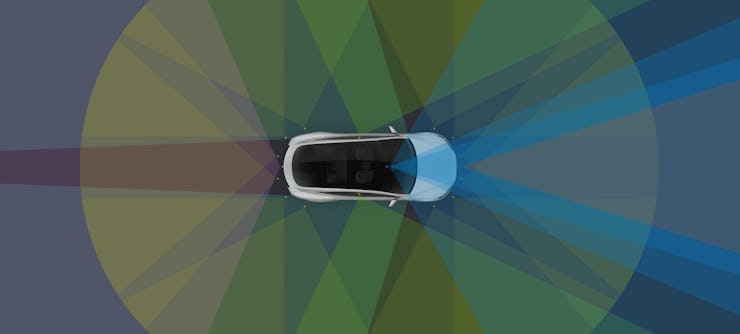

Lidar is an abbreviation that stands for Light Detection and Ranging. The system bounces laser beams off nearby objects to work out how far away they are, using the time each beam takes to reach an object and return to the sensor. In many autonomous car systems, the lidar sensor sits on top of a car. It doesn’t provide enough information to drive a car, but the data can help a system with other sensors make a more informed decision.
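To make the timing idea concrete, here is a minimal sketch of the time-of-flight calculation. It assumes the sensor measures the round trip of a single pulse, so the one-way distance is half the distance light travels in that time. The function name and the 400-nanosecond example are illustrative and do not come from any particular lidar product.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    The pulse travels to the object and back, so the distance to the
    object is half of the total path the light covered.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Hypothetical reading: the pulse comes back after 400 nanoseconds.
    print(f"{distance_from_round_trip(400e-9):.1f} m")
```

A return after 400 nanoseconds works out to roughly 60 meters, which shows how short the time intervals are that a lidar sensor has to measure.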

Lidar is popular with self-driving carmakers. Google used a $75,000 Velodyne lidar sensor in its first self-driving car prototype, along with a combination of GPS, camera, and a computer to work out the car’s location. In January, it was revealed that the company had managed to reduce costs on the sensors by 90 percent, which might go some way toward addressing Xiao’s cost-effectiveness concerns.

Uber is also using the technology. Waymo, Alphabet’s self-driving car company, is suing Uber for using a lidar system that looks suspiciously similar to its own.

A Velodyne LiDAR sensor mounted on a Ford Fusion autonomous development vehicle at CES 2017.

But Xiao isn’t the only one ignoring lidar. Tesla CEO Elon Musk skipped over the technology as well, claiming that a combination of cameras, GPS, and ultrasonic sensors should provide enough information to drive a car. Tesla plans to release a self-driving software update for some of its existing cars by the end of the year.

Xiao, along with Musk, doesn’t think lidar is the be-all and end-all of computer vision systems. He explains that the sensors have a lower resolution than even the cheapest cameras: 64 pixels vertically, compared to a VGA camera that has a vertical resolution of 480 pixels.

Lidar’s range is also limited by how powerful its beams are. Cameras can take in light from very far away, but lidar is designed to send out a beam and receive it back. That means the range is dependent on the power of the original beam.
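As a rough illustration of why beam power caps range, the sketch below uses a simplified form of the lidar range equation. It assumes a diffuse target larger than the beam and ignores atmospheric loss, so the returned power falls off with the square of the distance. Every parameter value here is a hypothetical placeholder, not a figure from Xiao or from any sensor datasheet.

```python
import math


def max_range_m(
    transmit_power_w: float,
    min_detectable_power_w: float,
    reflectivity: float = 0.1,        # assumed fraction of light the target reflects
    aperture_area_m2: float = 1e-3,   # assumed receiver aperture area
    system_efficiency: float = 0.5,   # assumed optical and electronic losses
) -> float:
    """Estimate the farthest distance at which a return is still detectable.

    Simplified model: received = transmitted * reflectivity * aperture
    * efficiency / (pi * range**2). Solving for range at the detection
    threshold gives the expression below.
    """
    numerator = transmit_power_w * reflectivity * aperture_area_m2 * system_efficiency
    return math.sqrt(numerator / (math.pi * min_detectable_power_w))


if __name__ == "__main__":
    base = max_range_m(transmit_power_w=1.0, min_detectable_power_w=1e-9)
    boosted = max_range_m(transmit_power_w=4.0, min_detectable_power_w=1e-9)
    # Under this model, four times the power only doubles the range.
    print(f"range ratio: {boosted / base:.2f}")
```

Under these assumptions range grows only with the square root of transmitted power, so an eye-safety ceiling on power translates fairly directly into a ceiling on how far the sensor can see.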

“The power that sends the signal out cannot be too strong, because otherwise it will hurt people’s eyes,” Xiao says. “Therefore, it’s very important the range that you can see cannot be too far away.”

Xiao also says that lidar doesn’t cope well in extreme conditions. Hot and cold temperatures can throw off the sensor calibration, which could disrupt the data the sensors produce. Xiao claims most lidar sensors will fail to make it through a year.

“If we are talking about self-driving cars in the four years, five years, it’s going to be very difficult to have any automotive-grade hardware for the lidar perspective,” Xiao says.

In rainy conditions, drops of water can also scatter the laser beam. A puddle of water may act like a mirror, shooting the laser off elsewhere. That means the beam may never make it back to the sensor, losing the information in the process. Cameras have a similar issue with rain collecting on the lens, but Xiao’s team has designed a wiper to clean the lens while the car is driving.

All this calls into question why anyone would bother using lidar. But Xiao hasn’t totally written off using lidar: it all depends on whether the price is right. Lidar can provide useful depth information, and either way, having some overlap in collected data is better than having gaps that need filling. At this stage, though, why spend thousands of dollars on a sensor if a series of cheap cameras can do the job?

“Based on all these considerations and also, of course, the cost consideration, that’s why we choose a camera for the solution,” Xiao says.