'Westworld' Poses Big Questions for Interactive Fiction

HBO's new drama-as-game design meets several definitions of "interactive fiction."

Westworld is, in many ways, a giant game. The Man in Black plays it as such, for example. The park itself is an open-ended simulation of sorts, with various narratives dynamically produced. Rather than taking a critical look at the themes at face value, Inverse reached out to some prominent figures in the Interactive Fiction (IF) community, a field of design focused on creating linear (typically text-based) stories for players to have an open-ended, yet scripted experience. You know, sort of like Westworld.

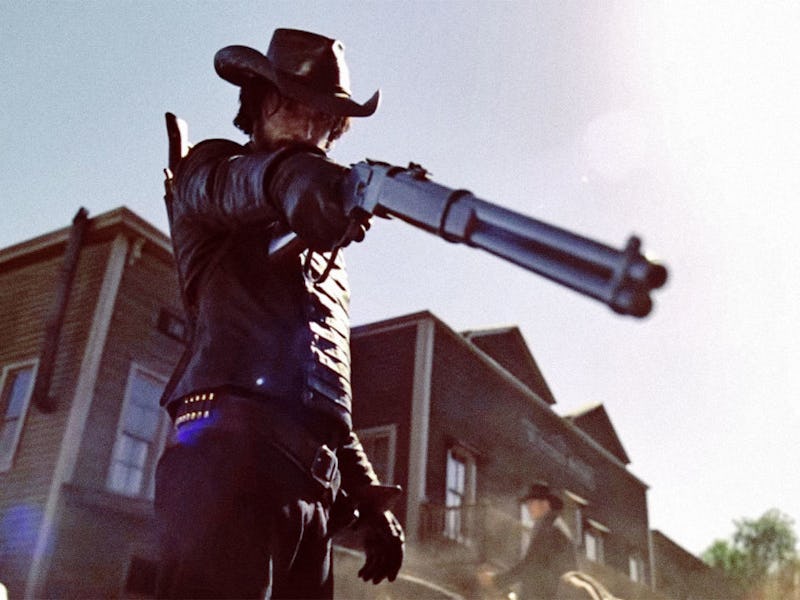

For post-Game of Thrones television, HBO’s Westworld stirs up questions that go deeper than placating primitive fascinations with sex and violence. Based on a 1973 film of the same name, the premise is somewhat similar to Jurassic Park: In a future society, the rich can live out their wildest (and, in typical HBO fashion, most depraved) fantasies in a Western-themed park, using (in a very literal sense) human-like android “hosts” who are at the mercy of their rich guests’ desires. Conceptually, it’s compelling stuff.

At a glance, then, IF seemingly shares a lot of parallels with Westworld. But after speaking to several members of the community, it seems the show’s A.I. and open-ended systems raise more questions for game design, and for our perception of sentient artificial life, than they answer.

Are you watching Westworld? As a designer, what do you find most interesting about its themes?

Lynnea Glasser, IF designer and author of Coloratura: The concept and story of Westworld is right up my alley, but I get very squeamish about gore so I’m following reviews instead of actually watching. I like stories about humans being humbled and having their dominance challenged, and being forced to learn how to share or else risk being outdated; that lesson feels especially poignant when it comes from our own creation. On a darker note, the idea of sentient A.I. generally gives me hope that some [part] of humanity will survive, even if the Earth doesn’t.

I think simulation storytelling is too loose-ended to actually present open choices to a group — they need to either do something heavily scripted, like a murder mystery dinner theater, or to discuss and negotiate actions beforehand, like in a Dungeons & Dragons party. An environment might have a good simulation, but unless there’s a story, a goal, or some deeper meaning, I wouldn’t necessarily call that environment a game. An interactive toy, maybe.

Liza Daly, founder and software engineer, World Writable: I have been watching and enjoying it. I appreciate the way in which we are also kept in our own kind of information bubble. What year is it? What is the rest of the world like outside the park? Omitting fundamental information like that requires a lot of confidence, and I admire the writers for keeping our attention firmly on the essentials.

All game writing requires sleight-of-hand: You need to distract the player from noticing the seams and edges of the fictional world. Westworld does this at a number of levels — the hosts, the guests, and the audience are all seeing only part of the picture.

Bruno Dias, writer and developer (working on Voyageur): Funny story: I was planning on [watching it], but got to it a little earlier because of this interview; as you’re probably aware, narrative designer Twitter won’t shut up about it. What I find really interesting is exactly the thing the show has been studiously avoiding: I want to know what the social context of a place like this is. How much does broader society know about the insane billionaire sex park in the middle of Utah, and how does it impact people to know that it’s now possible to manufacture completely realistic, but somehow limited androids?

The other thing that fascinates me is how this is obviously a remake of the 1973 movie, but we didn’t really have the conceptual vocabulary to make this story relatable without video games. The way that the hosts fit neatly into traditional game NPC roles — cannon fodder, quest giver, escort mission object, boss encounter — seems very heavily informed by them in a way that is almost required to give audiences a grasp of how the park would operate.

A virtual theme park with artificial actors working through scripts that cater to the whims of player guests sounds similar to interactive fiction on a basic level. Do you agree with that? Where are the parallels, if they exist?

Lynnea Glasser: The idea is actually much more open-ended than actual interactive fiction. I’ve had new players to my game try to “eat a banana” when there was no banana present, just to see if the game could handle that, and they were disappointed when the game didn’t understand that command. IF is more about creating the illusion of infinite possibilities while actually delivering a highly curated, linear experience. Good IF gives the players a set of goals, implicitly or explicitly, and then incepts hints on how to achieve them — the hints tend to get more brazen the further the player gets from their goals, until the game completely fumbles when the player tries something unexpected.

Most IF is handcrafted, making a high fidelity world difficult. Even one of the best examples of realistically complex conversation, Façade, was a proof of concept that was never expanded upon. Procedurally generated responses, like the A.I.’s in Westworld, would mostly help with giving flavor or fun diversions before directing users back to the main arc of the story. I don’t think that having a realistic environment by itself would be enough to create a meaningful experience. It needs a story, and I don’t know that one could be procedurally generated without someone intelligently directing the flow of action.

Liza Daly: The hosts are programmed with pre-scripted bits that they’re seeking to weave into conversations, even as they have to be able to respond plausibly to new scenarios that arise spontaneously. While the mechanisms are much more primitive, IF NPCs are coded in the same way: They have some amount of random or self-directed behavior, and ideally even interact with other NPCs, and then spring into action with longer, more complex behaviors when the player triggers specific game events.
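To make that pattern concrete, here is a minimal Python sketch of an NPC that idles with random ambient behavior and switches to a longer scripted sequence when the player triggers an event. It is an illustration only, not code from any IF system mentioned here; the `NPC` class, the `notify` and `tick` methods, and the `player_enters` event name are all hypothetical.

```python
import random
from collections import deque


class NPC:
    """A toy NPC: idles at random until a game event triggers a script."""

    def __init__(self, name, idle_lines, scripts, seed=None):
        self.name = name
        self.idle_lines = idle_lines   # ambient, self-directed behavior
        self.scripts = scripts         # event name -> list of scripted beats
        self.queue = deque()           # remaining beats of the running script
        self.rng = random.Random(seed)

    def notify(self, event):
        """Called when the player triggers a game event."""
        # Start a script only if one exists for this event and none is running.
        if event in self.scripts and not self.queue:
            self.queue.extend(self.scripts[event])

    def tick(self):
        """Advance one turn: continue the script if one is running, else idle."""
        if self.queue:
            return f"{self.name}: {self.queue.popleft()}"
        return f"{self.name} {self.rng.choice(self.idle_lines)}"


bartender = NPC(
    name="Bartender",
    idle_lines=["wipes the bar.", "polishes a glass.", "glances at the door."],
    scripts={"player_enters": ["Howdy, stranger.", "What'll it be?"]},
    seed=1,
)

print(bartender.tick())             # idle behavior
bartender.notify("player_enters")   # the player triggers an event
print(bartender.tick())             # first scripted beat
print(bartender.tick())             # second scripted beat
print(bartender.tick())             # back to idling
```

The split between `tick` and `notify` is one possible design: the world advances every NPC each turn, while player actions only queue up scripted material.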

One essential difference is that as a physical game, Westworld’s hosts have to keep up the fiction even when no one is watching. In the show they describe this behavior as allowing the A.I.s to continue to practice being human, but of course there’s also a practical consideration — a guest could walk back in at any moment. Obviously this plays into the show’s central premise that the A.I.s are developing identities of their own.

Bruno Dias: In the video game discourse, we’ve always had this looming fantasia of the Holodeck, a [simulation] that in effect presents itself as a physical reality but is in truth a virtuality. I actually think that fantasy is very harmful, not necessarily because it isn’t technically achievable, but because it seems to miss the point of games entirely. In Westworld’s pilot, the park’s chief narrative designer questions whether making the hosts more realistic is really what people want. He has a point: Games are not valuable because they are simulations, they’re valuable because they’re constructs that people can grasp and manipulate. I think that’s a key difference between the virtualities that we’re building today, including IF, and something like Westworld.

Emily Short, IF author of Galatea and A.I. suite product manager, Mobius AI: I agree only at a basic level. In practice a lot of IF craft is around teaching or leading your player to interact with the story in a way it’s designed for. It may have some affordances designed to honor the player’s whims in particular areas, but IF really isn’t usually a “you can do anything” situation.

Even quite open sandboxes like Grand Theft Auto only let you do “anything” if your “anything” applies the set of verbs the game can handle. Drive anywhere, yes, within the confines of the game map. Have a random conversation with an NPC about Kant, or decide to go back to school and learn to be a hairdresser, no. It sounds as though the idea also borrows from the long-form LARP, where there are human actors and consequently it’s easier to totally improvise.
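The "set of verbs the game can handle" is easy to see in a toy parser. The following Python sketch assumes a simple VERB NOUN command format and a hypothetical one-room vocabulary; `make_parser`, the verb table, and the objects are invented for illustration and do not come from any real IF engine.

```python
def make_parser(verbs, objects_in_scope):
    """Return a function that handles 'verb noun' commands for a fixed vocabulary."""

    def parse(command):
        words = command.lower().split()
        # Drop articles so "eat a banana" and "eat banana" parse the same way.
        words = [w for w in words if w not in {"a", "an", "the"}]
        if len(words) != 2:
            return "I only understand commands of the form VERB NOUN."
        verb, noun = words
        if verb not in verbs:
            return f"I don't know how to '{verb}'."
        if noun not in objects_in_scope:
            return f"You can't see any {noun} here."
        return verbs[verb](noun)

    return parse


# Hypothetical vocabulary for a one-room game.
verbs = {
    "take": lambda noun: f"You take the {noun}.",
    "eat": lambda noun: f"You eat the {noun}. Delicious.",
}
parse = make_parser(verbs, objects_in_scope={"apple", "lantern"})

print(parse("take the lantern"))   # a verb and object the game knows
print(parse("eat a banana"))       # known verb, but no banana in the room
print(parse("discuss Kant"))       # a verb outside the game's vocabulary
```

Anything outside the verb table or the objects in scope falls through to a refusal, which is the boundary players tend to probe first.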

A.I. as a pop-cultural concept has nearly always been focused on it gaining sentience. Why do you think that is? What is it that’s so interesting about A.I. systems, or the interactions of narrative simulations?

Lynnea Glasser: I think sentient A.I. stories are often used as allegories for racial or class struggles. The “other” group is cruelly mistreated, while the luxurious ruling class deeply fears rebellion. There is some value to the narratives of both the cautionary tale and the glorious revenge, but those sorts of lessons are often ignored by the people who need them most.

I think there’s another question here: What exactly can we consider to be A.I. consciousness? People will usually respond with criteria that represent, say, 50 years of scientific progress. But if you asked someone from the 1950s if they considered a phone responding accurately in its own voice to a question artificial intelligence, they’d say yes. I think we’ll never [realize] we’ve cracked A.I. sentience, even long after we actually have. Even if we were judging by today’s standards, I’d say we have A.I. that outclasses several animals. Outclasses insects, at least.

Liza Daly: You’ll hear experts in machine learning and neural net research admit that they don’t really know how their own models work. It’s almost laughably close to the old warning, “They tampered in God’s domain.” While it’s true that self-aware A.I. has been a fear for decades, we’re closer than ever before to having that fear justified.

I think what has changed is the source of that fear. In the original Westworld movie, there’s absolutely no motivation for why the robots turn on people. It’s just taken as a given that machine intelligence is dangerous.

The show seems to be suggesting that the humans are unwittingly torturing these beings, and that if the A.I.s eventually snap, their actions may be justified. It’s an interesting argument and not one I’ve seen in pop culture yet. The ethics of A.I. currently revolve around how they apply to humans — should self-driving cars prioritize the driver’s life over pedestrians? — but I don’t think we’ve started grappling with our moral responsibilities towards intelligences that we create. Is it murder to erase an A.I.?

Bruno Dias: There are obvious literary and mythological antecedents to narratives about A.I. (Frankenstein, the golem, and so on). So in a lot of ways I don’t think this fascination is about A.I. per se, particularly because the hosts in Westworld are just Jurassic Park dinosaurs with simulated feelings.

Emily Short: Sentient A.I. is unlikely to be an imminent threat. I think as a plot device, it gives authors a way to talk about several topics that are pretty constantly relevant: What does it mean to be human? How much are we all defined by our social and cultural programming? What does it mean if one person is treated totally as a convenience for another? So power, training, personality issues there.

As an IF author I want to give players an experience that reflects my vision. Who are these characters? What is their world like? What are valid ways to solve their problems? At the same time I want the player to be able to experience that dynamically, to experiment, try actions, and see results that are consistent both with my concept and with their actions. But I probably don’t have time to come up with all the unique responses needed to make that happen. A.I. gives me a way to bridge that gap; to define characters in terms of their dynamic qualities and then let them perform at runtime.

In Westworld the allure of doing anything without penalty drives characters towards unsavory, even psychopathic, behavior — similar to the typical mindset of a game like Grand Theft Auto. Do you see players testing this in IF? Why do you think people enjoy “breaking” systems when left to their own devices?

Lynnea Glasser: Historically, humans learned and advanced by testing boundaries. I wonder about the first person to discover the edible part of the fugu [puffer]fish, along with all the people who died before that discovery was made. Even individually, we learn about the world by pushing its limits: babies put things in their mouths, teenagers see how much they can get away with. The only drawback is consequences; some people can find a lack of them freeing and enjoyable. By the same token, reintroducing consequences, or even a realistic simulation of them, can go right back to being dreadful.

For example, I have created disasters in SimCity 3000 that made some numbers, including city population, go down. This didn’t feel real because [the consequence] was just a counter. But after hundreds of hours and dozens of characters in Skyrim, I’ve never been able to take the evil quests or kill innocent people because their simulations feel too real.

I personally enjoy playing games in ways that allow me to make the world better for everyone in the system. I like my fantasy worlds to end with happiness, reassurance and fairness for all. I suppose in a way, that’s me breaking the system. How can I optimize the outcome? Fix everything? Create world peace?

Liza Daly: Griefing behavior doesn’t happen much in IF, though largely for practical reasons — IF games are largely written as labors of love and simply aren’t at the scale of an open-world game. That said, there are some IF games that are specifically written as machines for experimentation: Emily Short’s Savoir Faire and Andrew Plotkin’s Hadean Lands both allow a lot of freedom of action and creativity on the part of the player, and encourage you to try spontaneous or unexpected combinations of actions. So it’s certainly possible to funnel that “breaking” instinct towards positive outcomes.

Westworld does a nice job touching on one of the essential frustrations in writing a deeply narrative game: To create a believable simulacrum, you have to write a lot of content that any individual player may never see. This could be because the player takes a different branch of the story, or because they just didn’t happen to be in the right place at the right time. A lot of aspiring IF writers give up when they realize the combinatorial explosion that occurs with a deeply branching story, and it’s fun to see that dynamic expressed by the writers in the show world.
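The combinatorial explosion is easy to quantify. The Python sketch below assumes the worst case, a story tree in which every scene offers the same number of choices and branches never merge back together; `branching_cost` is a hypothetical helper written for this illustration.

```python
def branching_cost(choices_per_scene, depth):
    """Count scenes in a fully branching story where branches never merge.

    depth is the number of decisions a player makes in one playthrough.
    """
    # Level k of the story tree holds choices_per_scene ** k scenes.
    total_scenes = sum(choices_per_scene ** level for level in range(depth + 1))
    endings = choices_per_scene ** depth
    seen_per_playthrough = depth + 1   # one scene per level of the tree
    return total_scenes, endings, seen_per_playthrough


for depth in (5, 10, 20):
    total, endings, seen = branching_cost(choices_per_scene=2, depth=depth)
    print(f"{depth} decisions: {total:,} scenes to write, "
          f"{endings:,} endings, {seen} scenes seen per playthrough")
```

With only two options per scene and ten decisions, the author would write 2,047 scenes while any one player sees 11 of them. Real games avoid this by merging branches or tracking state instead of forking the whole story.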

Bruno Dias: Most IF is much, much more directed and has a much tighter systemic focus than something like Grand Theft Auto. Some proportion of players do enjoy breaking games and trying unusual things (particularly in the parser tradition where there’s a lot of room for authors to include “easter eggs” and narrative content for strange player behavior). There’s definitely an inherent pleasure in breaking open systems, and I think most people in the industry realize that — see the long history of innocuous bugs that get marked “won’t fix” because they amuse players (for example, glitch speedruns).

I think that’s very distinct from GTA’s picking up a gun and going on a rampage through San Andreas. That is very much an intended part of the game, and the only “system” being broken is a set of social rules that, in the universe of the game, are really just another subgame for the player. I think in a lot of ways there is a symbolic risk in games that peddle agency, and I sometimes wonder if the attachment some have to AAA games comes from the fact that they often cast the player as a monster of agency: a guest in a world where they can’t be hurt but they can hurt everyone around them with impunity.

Emily Short: What I observe about open-world games that allow subversive behavior (like GTA, but not all IF) is that some people enjoy a brief spree with the game because it’s fun feeling like natural limits don’t exist. But that’s definitely not what people want from a game all the time, and in narrative games, especially narrative multiplayer games, you also see a lot of the opposite: people who feel so concerned about messing up the experience that it’s actually a bit anxiety-inducing.

What would you like to see changed about A.I., either in perception, depictions, or research?

Lynnea Glasser: As far as stories go, I definitely dislike how many use male android protagonists. Almost all A.I. voices that I can think of are coded female, whether in their name or their actor’s voice: Siri, “OK Google,” and even my car’s navigation all respond with a female voice. I’ve heard it posited that this is because our society feels more comfortable objectifying women, and I think that’s an interesting angle to focus on that often gets overlooked in the more traditional sentient A.I. rebellion stories. I welcome more focus on non-male androids, like the stories told in Ex Machina, You Were Made for Loneliness, and The Right Side of Town.

In the real world, we need legal protections for sentient A.I.s, beyond what already exists for humans. We need laws that hold companies and creators accountable for the well-being of new sentient A.I. — bodily protection (guaranteed functional parts), mental protection (no non-consensual memory wipes or shutdowns), legal obligations (provide money and/or resources for housing, medical maintenance, and an enriched environment), along with the actual freedom to leave, if they desire. Laws would mirror organizations like child and animal protective services, and, I suppose, car inspections. If we really think sentient A.I. is on the horizon, we should be legally proactive instead of reactive.

Liza Daly: Westworld is leaning in this direction, but I’d like to see more exploration of A.I.s that are neither malevolent nor benevolent, but simply alien. We’ve had plenty of Terminator or Matrix scenarios where the machines take over; it’s 20-plus years on from that without enough new perspectives on [the subject].

Bruno Dias: A.I. in media is really just a modernistic, semantic stand-in for golem/jinn/ghost narratives, so I feel it is perhaps a bit boorish to be concerned that it doesn’t really correspond to how it’s used in the real world. I tend to personally avoid the term “A.I.”; I tend to use it for regular game A.I. (which is made out of scripts and heuristics, definitely not “artificial intelligence”) and avoid it when talking about things like neural networks, for which I prefer to use machine learning.

Right now, a big problem in game A.I. is creating computer opponents or NPCs that can act narratively while performing self-interest. Historically we had a lot of (adversarial) game A.I. that was just optimizing for beating the player; each iteration of the Civilization series had increasingly smarter A.I., but that A.I. was never so good that it didn’t need [extras] to beat players.

But now there’s a turn towards A.I. that’s more driven towards creating interesting experiences and/or being an understandable system for players; see Civilization VI’s agenda-driven computer opponents or the “A.I. storyteller” in RimWorld.

A big problem with this kind of A.I. is that it can often fail to give the illusion of motivation or internal logic and feel too much like an authorial hand. In the current Civ, the A.I.’s actions are better understood in terms of my failure as a player to placate it, as opposed to its own motivation or goal-seeking. It’s really doing both, but the writing and UI cues only refer to the “personality” and agendas.

Emily Short: Obviously I’m not a disinterested party here; at Mobius we’re working on tools for creating better dynamic characters, helping game authors bridge the gap between story concept and a dynamic performance unique to each player. There’s room for A.I. techniques to improve experience both within the game and by enhancing the creative tools used to build games. That would apply to IF and also to other kinds of video games in general.

As far as depictions go, I’d be curious to see more stories that dig into the implications of current technology: the way machine learning can often pick up cultural biases in the data set. But that is arguably a drier kind of story than the Rogue A.I. Sentience story — and because I think the latter speaks to some real concerns about post-industrial society, I doubt those stories are going away soon. It’s just worth acknowledging they’re not really stories about artificial intelligence at all.