Do androids dream of harmony with humans? They will if the British Standards Institution (BSI) has anything to say about it. The UK’s leading business standards organization has published a guide that outlines how robots and robotic systems should take ethics into account.

“As far as I know this is the first published standard for the ethical design of robots,” Alan Winfield, robotics professor at the University of the West of England, told The Guardian. “It’s a bit more sophisticated than Asimov’s laws: it basically sets out how to do an ethical risk assessment of a robot.”

The catchily titled BS 8611:2016, priced at £158 ($208), is aimed at manufacturers and is meant to help them consider how ethics may come into play in robot design. The best-known framework for robot ethics is, of course, Isaac Asimov’s “Three Laws of Robotics,” but real-world robot ethics will likely require a more nuanced interpretation of artificial morality than those three rules provide.

It would never hurt us.

The BSI does start with the basics, such as not designing robots to hurt people, but the document also explains how gray areas may arise, such as emotional attachment.

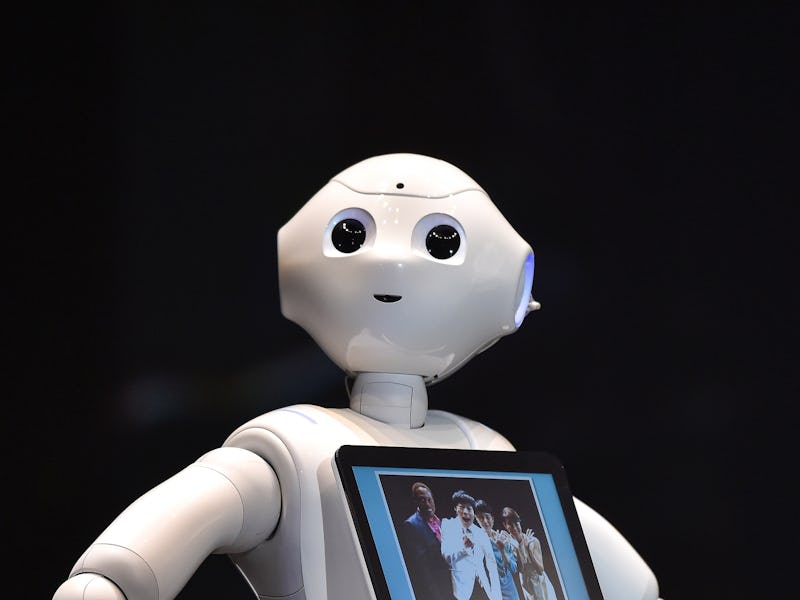

The BSI doesn’t dictate whether falling in love with a robot is okay, but it flags the possibility as an issue to consider. This could become a point of contention further down the line, when sex robots fitted with artificial intelligence reach the market. For now, though, the BSI is more concerned that children could form bonds with robots, since kids may not understand that the machines are not living beings.

Rules like these may seem unimportant until robots become conscious, but these decisions need to be made sooner rather than later. Earlier this month, Germany’s transportation minister outlined three rules that will come to define the country’s regulations around self-driving cars. For example, the cars will not be allowed to discriminate based on age, meaning that manufacturers must not program a driverless car to steer into a specific group of people if it has to choose during an emergency.

Concerns like these will become more pressing over time as A.I. developers grapple with how to teach robots particular value systems.