
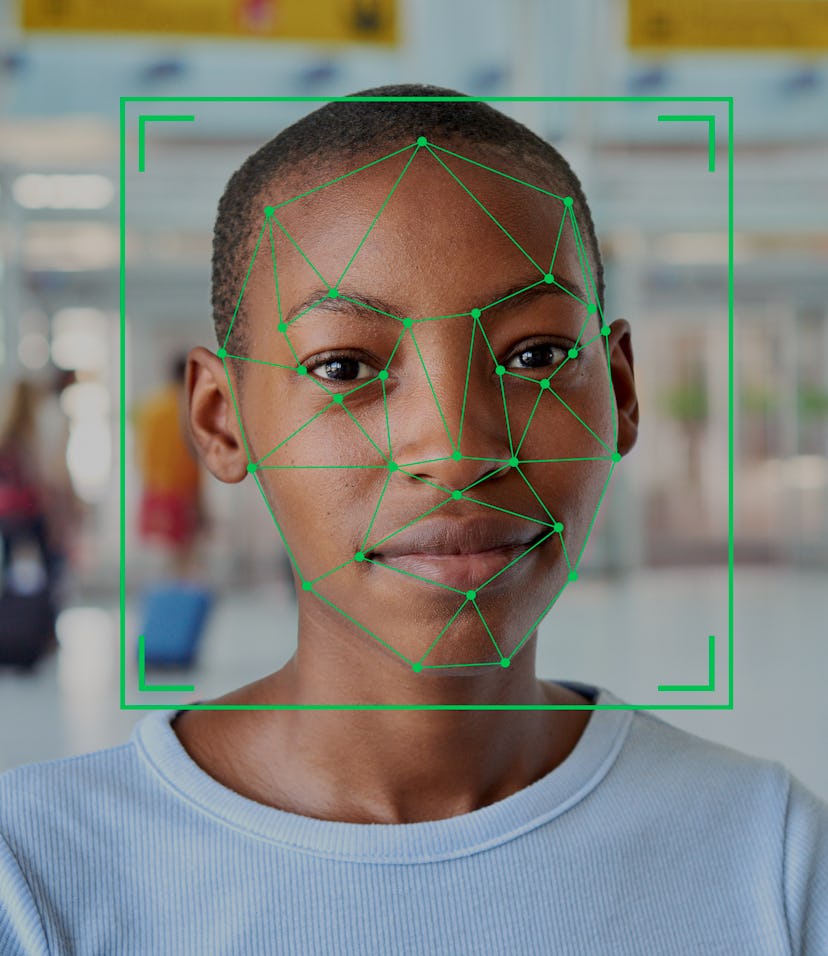

Clearview AI pushes false ACLU endorsement of its facial recognition accuracy

And the ACLU was not having it.

Clearview AI, New York’s own dystopian facial recognition company, is at it again. Trying to fend off bad (read: shockingly exposing) press, CEO Hoan Ton-That has asserted not only the legality of the company’s social media scraping but the accuracy of its AI as well. As part of its PR spiel, Clearview claims that a test showing its software to be highly accurate was based on the methodology the ACLU used to test Amazon’s Rekognition. On Monday, the civil liberties organization told BuzzFeed News it rejected Clearview’s accuracy claims, citing the inherently flawed execution of the company’s test.

Why the claim is BS — When the ACLU tested Amazon’s facial recognition software, it supplied photos of sitting members of Congress to be matched against public arrest photos. The organization found that people of color were far more likely to be flagged as false positives. In Clearview’s test, which also included local lawmakers from Texas and California, the lawmakers’ images were matched against nearly 3 billion photos.

Some of those 3 billion photos may have been of the lawmakers themselves, allowing for greater accuracy, and many would be of far better quality than the images law enforcement officers usually have access to. The test also didn’t account for false positives or for what happens when someone isn’t in the database. Even worse, no one on the panel that reviewed the test specializes in facial recognition.

In a post on the ACLU’s website, Jacob Snow, a technology and civil liberties attorney, wrote:

“Imitation may be the sincerest form of flattery, but this is flattery we can do without. If Clearview is so desperate to begin salvaging its reputation, it should stop manufacturing endorsements and start deleting the billions of photos that make up its database, switch off its servers, and get out of the surveillance business altogether.”