
YouTube considers removing the 'share' button on sketchy content

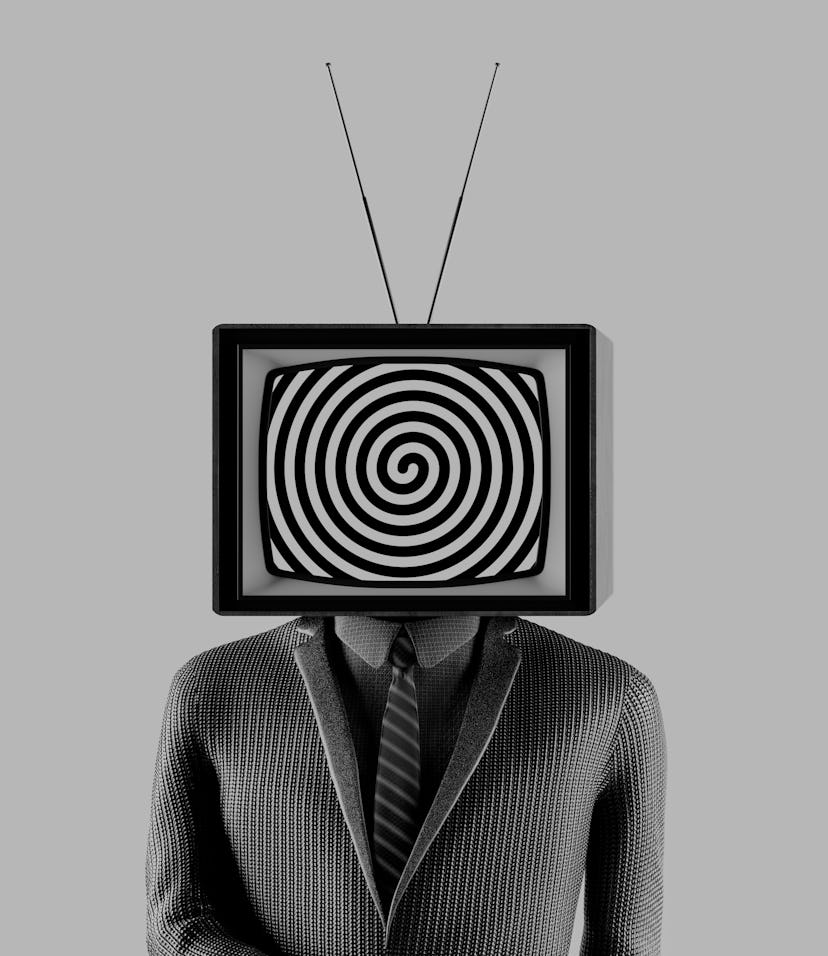

YouTube wants to curb misinformation, but it's not quite sure how to do so yet.

How do you solve a problem like misinformation? YouTube doesn’t have all the answers, but the sprawling video-sharing platform is determined to try. In addition to monitoring the spread of questionable content (like rogue “alternative historians”) through its own recommendation system, YouTube wants to address videos that go viral after being shared to other platforms, such as obscure YouTube videos that make the rounds in Facebook QAnon groups.

In an official YouTube blog post, Chief Product Officer Neal Mohan shared the state of the company’s efforts to limit the spread of misinformation. In today’s rapid news cycle and constant tsunami of online content, YouTube can’t just train algorithms to catch flat-earthers, 9/11 truthers, or other proponents of well-established conspiracy theories. Misinformation is complex and varied, making it increasingly challenging to trace (let alone curb). Narratives can “look and propagate differently, and at times, even be hyperlocal,” which makes it difficult for YouTube to monitor content across the dozens of languages and more than 100 countries where it operates.

On the border — One particular challenge is what Mohan calls borderline content: “videos that don’t quite cross the line of our policies for removal but that we don’t necessarily want to recommend to people.”

Another moderation issue is fresh misinformation: flavors of falsehoods so new that there isn’t enough data to train YouTube’s systems. Amidst rapidly mutating theories or fast-breaking news events, unverified content can’t be immediately fact-checked. “In these cases, we’ve been exploring additional types of labels to add to a video or atop search results, like a disclaimer warning viewers there’s a lack of high-quality information,” Mohan writes.

But should the share button go? — In his post, Mohan weighed the pros and cons of one notable option: disabling sharing. Though YouTube has effectively stifled borderline content by removing it from its “recommended videos,” that content can still spread when viewers share it elsewhere on the web, such as on social media.

“One possible way to address this is to disable the share button or break the link on videos that we’re already limiting in recommendations,” he wrote. “That effectively means you couldn’t embed or link to a borderline video on another site.”

Ultimately, like many other internet companies, YouTube doesn’t know how to stop misinformation, and it knows that determining what’s true is an imperfect science. What is truth, anyway? (The Stanford Encyclopedia of Philosophy’s entry on truth runs 15,000 words and still isn’t definitive.)

Still, pressure on YouTube is mounting. Last month, 80 fact-checking organizations signed an open letter urging the company to take stronger action against harmful misinformation and disinformation.