China Uses Big Data to Target Minority Groups, Says Human Rights Watch

Predictive policing enables discrimination.

The Chinese government is using predictive policing algorithms to target ethnic minorities in the Xinjiang region, according to a Human Rights Watch report released Monday.

The region, in northwestern China, is home to 11 million Uyghurs, a Turkic Muslim ethnic group that has been discriminated against by the Chinese government in recent years.

Now, authorities are reportedly using big data to systematically target anyone suspected of political disloyalty. The push is part of the “Strike Hard” campaign, aimed at quashing potential terrorist activity in China. In practice, this has led to disproportionate policing of Uyghurs, Human Rights Watch says.

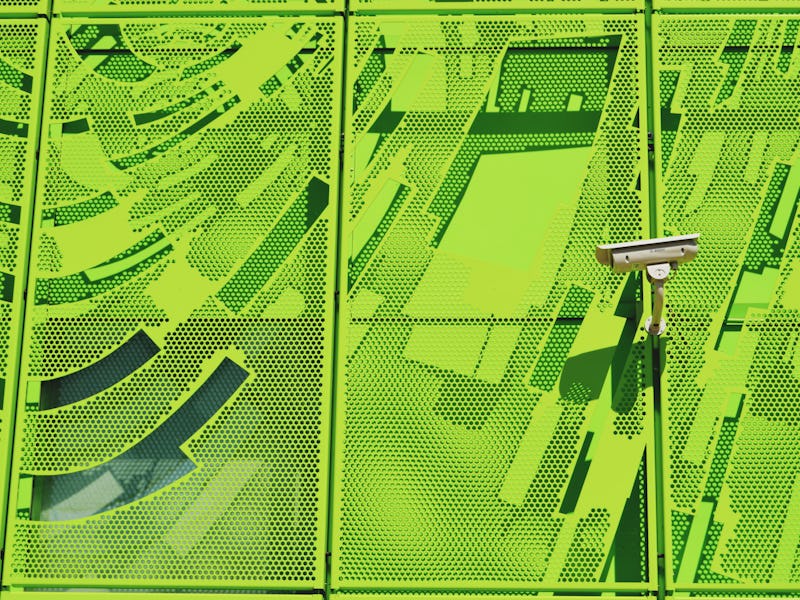

The predictive policing system, known as the Integrated Joint Operations Platform (IJOP), is fed data from a variety of surveillance tools. These include CCTV cameras; license plate and citizen ID card numbers collected at security checkpoints; and a trove of personal information, including health, banking, and legal records.

In addition to automated surveillance, government officials conduct home visits to collect data on the population. A Chinese businessman shared with Human Rights Watch a form he filled out for IJOP's records. Among other things, the questionnaire asked whether he was Uyghur, how often he prays, and where he goes for religious services.

IJOP uses all of these data inputs to flag people as potential threats. When someone is flagged, police investigate further and take the person into custody if they deem them suspicious.

“For the first time, we are able to demonstrate that the Chinese government’s use of big data and predictive policing not only blatantly violates privacy rights, but also enables officials to arbitrarily detain people,” said Human Rights Watch senior China researcher Maya Wang.

According to the report, some of the people flagged have been sent to political education centers, where they are detained indefinitely without trial.

“Since around April 2016, Human Rights Watch estimates, Xinjiang authorities have sent tens of thousands of Uyghurs and other ethnic minorities to ‘political education centers,’” the report said. IJOP is lending credibility to these detentions by applying a veneer of objective, algorithmic analysis to the discriminatory arrests.

To make matters worse, the inner workings of IJOP are shrouded in secrecy.

“People in Xinjiang can’t resist or challenge the increasingly intrusive scrutiny of their daily lives because most don’t even know about this ‘black box’ program or how it works,” said Wang.

A similar problem plagues many sophisticated machine learning systems: the decision procedures they employ can be opaque even to the algorithm's creators.

China's use of IJOP is worth paying attention to because predictive policing is likely to proliferate as the technology improves. As Jon Christian pointed out at The Outline, predictive policing systems are already used in some places in the United States. The Los Angeles Police Department uses software that anticipates where and when crimes are likely to occur so officers can head them off.

On the other side of the criminal justice system, courtrooms sometimes use algorithms that give potential parolees “risk assessment” scores to help judges make more informed decisions. Unfortunately, these supposedly unbiased algorithms actually discriminate based on race.

China's foray into predictive policing underscores the importance of responsible algorithm implementation as machine learning continues to enter the public sector. Perhaps it's time for tech-savvy governments to adopt a new mantra: Sometimes artificial intelligence creates more problems than it solves.